-

"Seven Databases in Seven Weeks: A Guide to Modern Databases and the NoSQL

Movement, 2nd Edition"

by Eric Redmond, and Jim Wilson -

"Fundamentals of Data Engineering"

by Joe Reis and Matt Housley -

"The Dashboard Effect: Transform Your Company"

by Jon Thompson and Brick Thompson

| Week | Topics | Assignment | Database |

|---|---|---|---|

| 1 (Aug 27, 29) | Unstructured and Evolving Data🔗 | Lab 1, HW 1 | IndexedDB |

| 2 (Sep 3, 5) | Data Modeling and Engineering 🔗 | ||

| 3 (Sep 10, 12) | Data Quality and Standards 🔗 | Lab 2, HW 2, Project Part 1: Data and Scalability | MongoDB |

| 4 (Sep 17, 19) | Data Ingestion and Processing 🔗 | ||

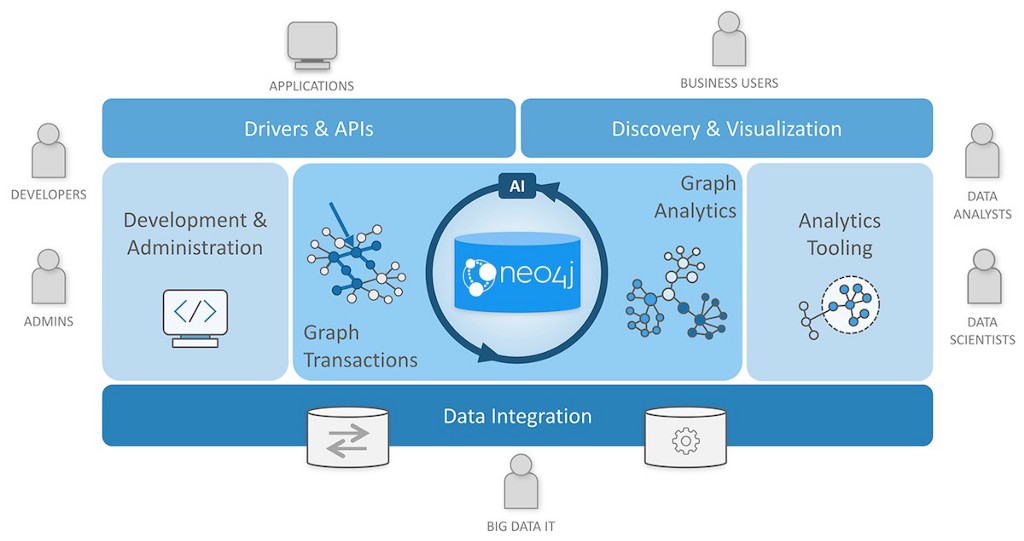

| 5 (Sep 24, 26) | Database Architecture 🔗 | Lab 3, HW 3 | Neo4j |

| 6 (Oct 1, 3) | Database Dashboard 🔗 | ||

| 7 (Oct 8, 10) | Database and Data Security 🔗 | Lab 4, HW 4 | Redis |

| 8 (Oct 15, 17) | Midterm Preparation | Midterm | |

| 9 (Oct 22, 24) | Data Lakes, Data Warehouses & Secondary Data Usage 🔗 | ||

| 10 (Oct 29, 31) | Mid-Semester Review | ||

| 11 (Nov 5, 7) | Database Sharding and Partitioninge 🔗 | Lab 5, HW 5, Project Part 2: Data Lake, Warehouse, and Dashboard | |

| 12 (Nov 12, 14) | Database Migration from SQL to NoSQL 🔗 | ClickHouse | |

| 13 (Nov 19, 21) | Course Review | ||

| 14 (Nov 26) | Practice Finals Exam | ||

| 15 (Dec 3, 5) | Project Presenations | ||

| 16 (Dec 10, 12) | Finals Week |

Dec 10, 8:00 am - 11:00 am, Baun Hall 214 Dec 12, 7:00 pm - 10:00 pm, John T Chambers Technology Center 114 |

Syllabus

Textbooks

Office Hours

Monday - Friday from 1:00 PM to 3:00 PM, located at CTC 117

Grading Schema

- Homework: 15%

- Labs: 15%

- Project: 40%

- Midterm Exam: 15%

- Final Exam: 15%

Assignments and Exams

The course includes regular homework assignments, hands-on labs, a comprehensive project, a midterm, and a final exam

Course Assumptions

It is assumed that students have a basic understanding of databases and programming concepts. Familiarity with JavaScript and Python development is recommended

GitHub Repository

The course code and materials will be available on GitHub. Please clone the repository at https://github.com/SE4CPS/dms2.

Collaboration and AI Rules

Collaboration is encouraged on assignments, but all submitted work must be your own. The use of AI tools for generating code or assignments is allowed

Research Opportunities

Students interested in research can expand their course project into a research opportunity, particularly in the areas of NoSQL databases, big data, and data analytics.

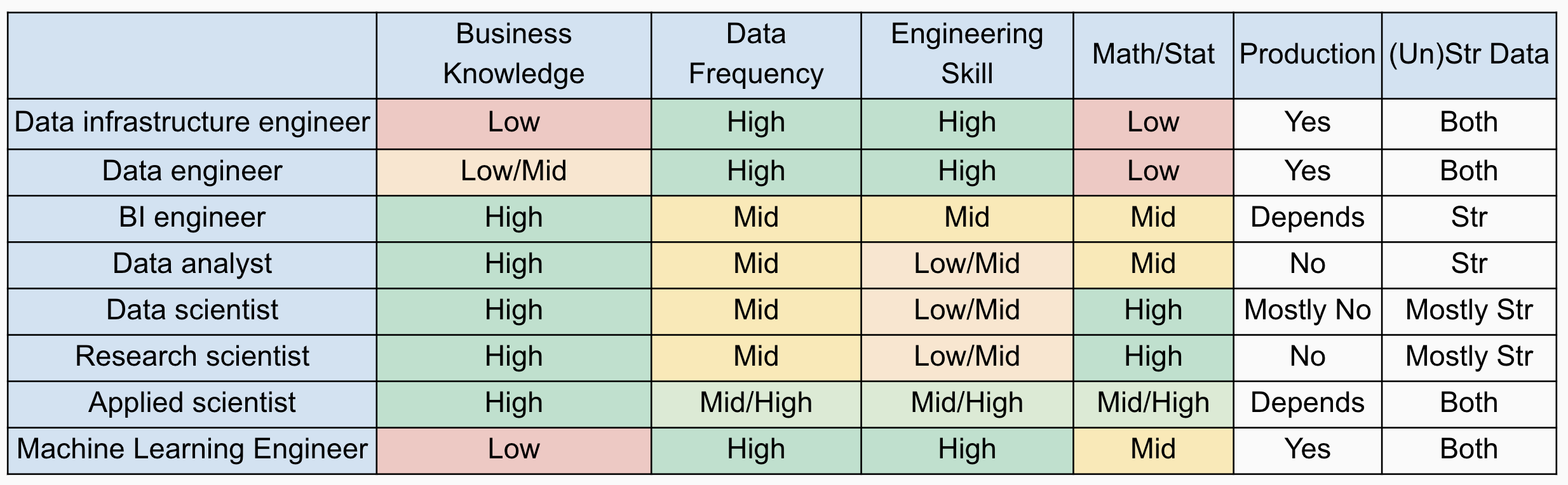

Course Goals 1/3

- Data Architect: Learned to examine database technologies for a specific application use case

- Data Engineer: Gained expertise in building data pipelines using NoSQL databases.

- Big Data Specialist: Gained experience in handling and processing large volumes of data using NoSQL technologies

- Database Administrator: Developed skills to manage, monitor, and optimize NoSQL databases

Course Goals 2/3

- Data Quality Specialist: Learned to define and implement data quality requirements.

- Data Scientist: Learned to work with unstructured data in NoSQL databases for advanced analytics

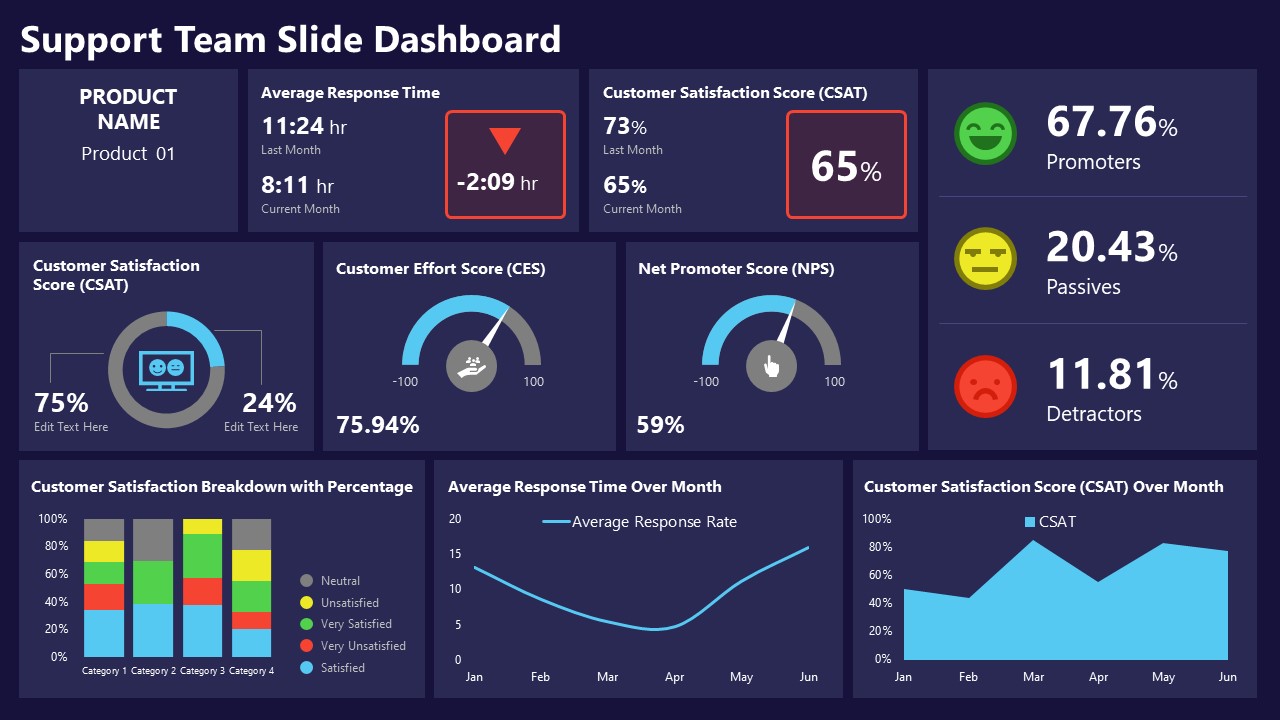

- Data Analyst: Learned to define KPIs and metrics relevant for dashboards

- BI Developer: Learned to integrate NoSQL databases into business intelligence tools for better decision-making

Course Goals 3/3

- Requirement: Effectively interact with domain stakeholders to examine data requirements.

- Strategic: Making architectural database and data decisions

- Operational: Ability to query NoSQL databases for specific requirements

Today

- What changed? Why DMS 2?

- Why is data unstructured and evolving?

- Why IndexedDB the Standard NoSQL Database for the Web?

Data Management Before 2000

- Limited Internet Connectivity: Isolated Systems

- Manual Data Entry: Transition from Paper Tables to SQL Tables

- Static Data Models: Predefined Schemas Before Data Entry

Early 2000s: Data Shift

- Connectivity: Rapid adoption of internet, NFC, BLE, and Wi-Fi;

- Human Data: Surge in real-time, user-generated data

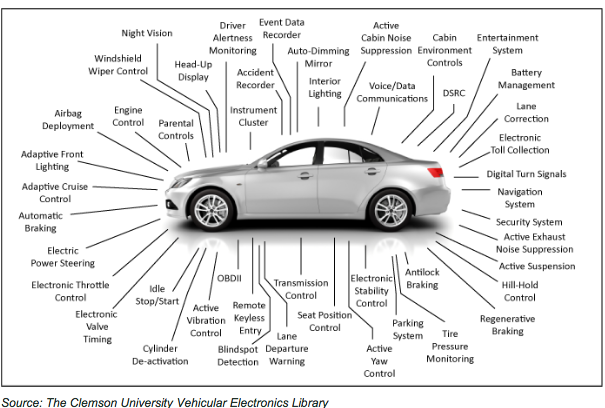

- Machine Data: Cheap transistors fueled machine-generated IoT data

- Mobile: Mobile devices; ubiquitous data access

Connectivity and Automation 🙂

- Healthcare: Medical record systems, telemedicine, and patient monitoring

- Agriculture: Precision farming, automated irrigation, and crop monitoring.

- Automotive: Autonomous driving systems, connected vehicles, and predictive maintenance

Connectivity and Automation 🤔

Connectivity and automation led to increased dependency on complex software systems across industries

Connectivity and Automation 😠

Software continuously updated independently of other dependent components.

Updating Relational Schemas

A consistent data model (SQL) is not realistic in complex software systems

Point of Sale System

A point of sale (POS) database should flexibly handle scanned data, adapting to changes in data structure without data schema update

Reading Data from QR Code

Product v1

{

"productID": "12345",

"name": "T-shirt",

"price": 19.99,

"quantity": 1

}

Product v2

{

"productID": "12345",

"name": "T-shirt",

"price": 19.99,

"quantity": 1,

"color": "Orange",

"size": "M"

}

Scaling Vehicle Log and Sensor Data

Scaling Vehicle Log and Sensor Data

{

"deviceID": "XYZ456",

"logSummary": {

"motionDetected": 145,

"doorOpened": 78,

"windowOpened": 54

},

"sensorReadings": {

"ABC123": {

"temperatureValues": 1200,

"humidityValues": 1150

},

"DEF456": {

"temperatureValues": 950,

"humidityValues": 900

},

"GHI789": {

"temperatureValues": 800,

"humidityValues": 850

}

}

}

Today

- What changed? Why DMS 2?

- Why is data unstructured and evolving?

- Why IndexedDB the Standard NoSQL Database for the Web?

Why is Data Unstructured and Evolving?

Today

- Why DMS 2? What changed?

- Why is data unstructured and evolving?

- Why IndexedDB the Standard NoSQL Database for the Web?

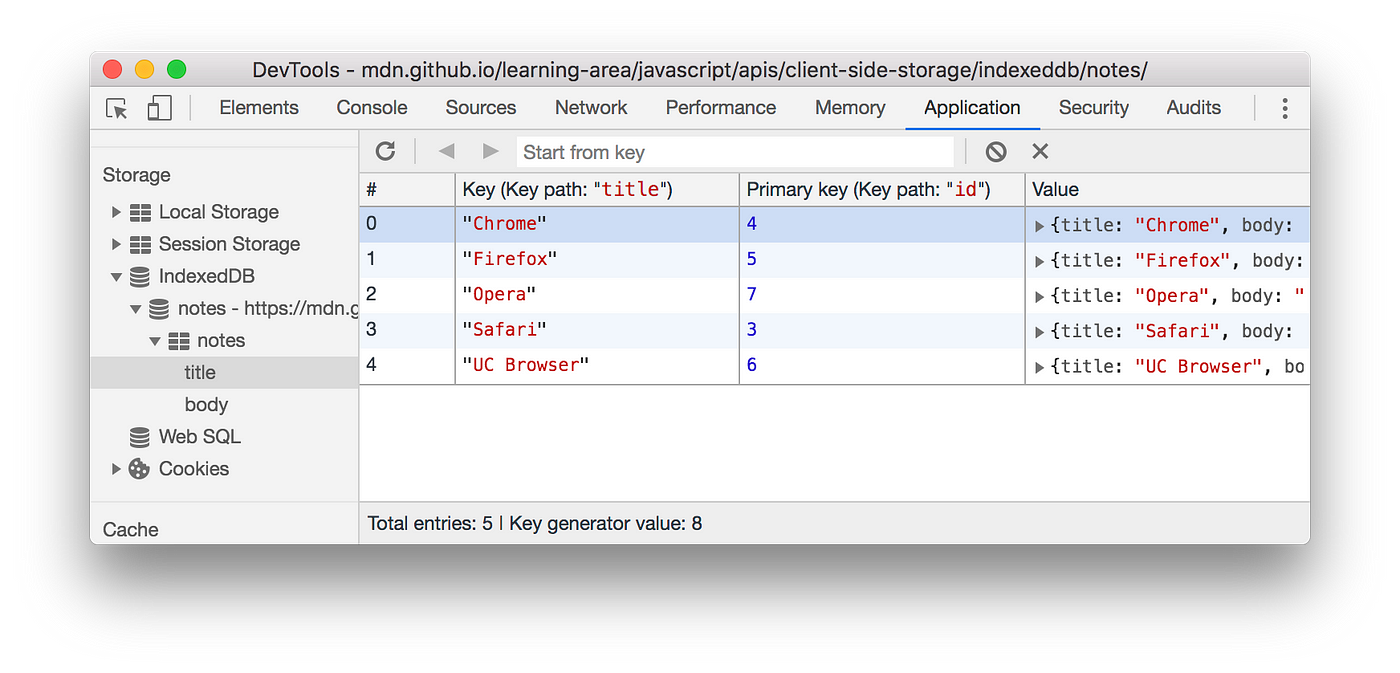

IndexedDB Timeline

- 2010: Introduction of IndexedDB by the W3C as a web standard for storing large unstructured data in the browser

- 2011: First implementations in major browsers, allowing offline web applications to store data locally

- 2014: IndexedDB 2.0 draft published and improve API for better performance.

- 2016: Broad adoption across major browsers, including Edge and Safari, for consistent support for web developers

- 2018: IndexedDB 2.0 officially recommended by the W3C

- 2020s: Ongoing improvements and optimizations

IndexedDB Resources

Prepare for Next Class: Reading

Please read the following material before our next class:

- Philosophy of NoSQL

- A Distributed Storage System for Structured Data (Abstract, Sections 1-2)

- Chapter: What is Data Engineering from "Fundamentals of Data Engineering: Plan and Build Robust Data Systems"

- Course Syllabus

Homework 1: SQL & NoSQL Review

Canvas Due Date: Tuesday, September 10

- Review the architectural differences between relational (SQL) and non-relational (NoSQL) databases

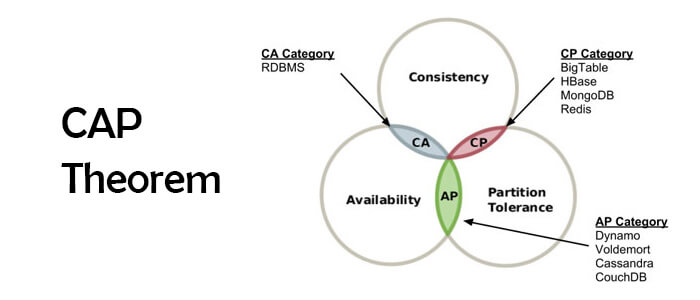

- Analyze the trade-offs between SQL's ACID properties and NoSQL's CAP theorem implications.

Lab 1: Agricultural Data Collection

Canvas Due Date: Tuesday, September 10

Lab 1: Agricultural Data Collection with IndexedDB

In this lab, you will develop a web application that collects and stores unstructured agricultural data using IndexedDB. You will implement functionality to handle various data types, including sensor readings, images, farmer notes, GPS coordinates, and timestamps

Lab 1: Submission Instructions

After completing the lab, follow these steps to submit your work:

- Create a new Git branch named lab1-firstname-lastname-XXXX (e.g., lab1-john-doe-1234).

- Push your code to the repository at https://github.com/SE4CPS/dms2

- Write five unit test functions to validate your data and include a screenshot of your browser console showing the retrieved data

- Submit the GitHub link and screenshot via the course's submission portal

Today

- Unstructured data

- IndexedDB Part 2

- Code Lab

Unstructured Data

- Data structure updates before table structure updates ✓

- Data values that cannot be structured in RDBS tables

Unstructured Data

Logs, Images, Videos, Text documents, Sensor data

Video Data

Video Data Sample:

--------------------

Frame 1:

[[34, 45, 56], [78, 89, 90], [23, 45, 67], ...]

Frame 2:

[[65, 75, 85], [123, 132, 140], [90, 100, 110], ...]

Frame 3:

[[12, 22, 32], [45, 50, 55], [34, 39, 43], ...]

...

Audio Data

Audio Data Sample (Waveform):

--------------------

[0.1, 0.3, -0.2, 0.5, -0.4, 0.8, -0.7, ...]

[0.2, 0.4, -0.3, 0.6, -0.5, 0.9, -0.8, ...]

...

Text Data

Text Data Sample:

--------------------

"The quick brown fox jumps over the lazy dog.

This sentence contains every letter of the alphabet."

"Data science is an interdisciplinary field that uses scientific methods,

processes, algorithms, and systems to extract knowledge from data."

Email Data

| Store | Entries |

|---|---|

|

Log Data

Log Data Sample:

--------------------

2024-08-28 12:45:23 INFO: User logged in - UserID: 12345

2024-08-28 13:02:10 WARNING: High memory usage detected

2024-08-28 14:15:45 ERROR: Unable to connect to the database

...

Logs Data Stores

| Store | Entries |

|---|---|

| Logs |

|

What is structured data?

- Data stored as free-form text, such as emails or social media posts

- Data organized in a predefined format, such as tables and columns

- Data generated by sensors, such as temperature readings

Which is unstructured data?

- A relational database storing customer information

- A JSON document representing a user profile

- A collection of log files generated by a web server

Which data is stored in NoSQL DBs?

- Tabular data in SQL databases

- Key-value pairs or document-based data like JSON

- Structured data with strict schema enforcement

Why unstructured data complex to manage?

- It requires a predefined schema before storage

- It lacks a predefined structure, making it harder to organize and query

- It is always stored in text files

Unstructured vs structured?

- 50% unstructured, 50% structured

- 80% unstructured, 20% structured

- 30% unstructured, 70% structured

- Data structure updates before table structure updates ✓

- Data values that cannot be structured in RDBMS tables ✓

Today

- Unstructured data ✓

- IndexedDB Part 2

- Code Lab

Why IndexedDB?

- Performance

- Comprehensive API

- ACID Compliance

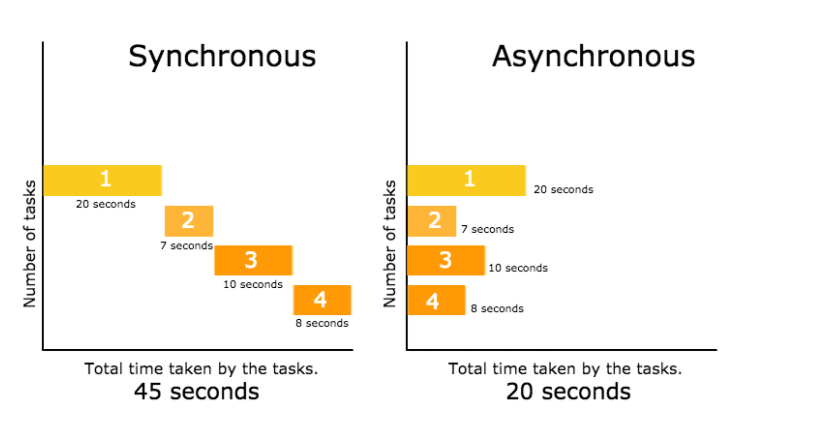

Performance via Asynchronous API

Performance via Asynchronous API

- Non-blocking operations keep the UI responsive

- Allows multiple requests to run simultaneously

- Prevents the browser from freezing during large data operations

let request = indexedDB.open("BookStoreDB", 1);

request.onsuccess = function(event) {

let db = event.target.result;

let transaction = db.transaction("Books", "readwrite");

let store = transaction.objectStore("Books");

store.add({ title: "JavaScript Basics", author: "John Doe" });

};

console.log("next Instruction...");

Performance via Asynchronous API

- Improves performance for data-intensive applications

- Enables smooth user experience without lag

- Handles large datasets effectively

Fetching all books in the background while the user continues browsing

let transaction = db.transaction("Books", "readonly");

let store = transaction.objectStore("Books");

let request = store.getAll();

request.onsuccess = function(event) {

console.log(event.target.result);

};

console.log("next Instruction...");

Performance via Indexing

- Fast data retrieval by creating indexes on frequently queried fields

- Reduces the time complexity of search operations

- Improves performance when dealing with large datasets

let request = indexedDB.open("BookStoreDB", 1);

request.onupgradeneeded = function(event) {

let db = event.target.result;

let store = db.createObjectStore("Books", { keyPath: "id", autoIncrement: true });

store.createIndex("authorIndex", "author", { unique: false });

};

Performance via Indexing

- Fast retrieve all books by a specific author

- Efficiently search and filter data

- Minimizes the overhead of scanning the entire database

let transaction = db.transaction("Books", "readonly");

let store = transaction.objectStore("Books");

let index = store.index("authorIndex");

let request = index.getAll("John Doe");

request.onsuccess = function(event) {

console.log(event.target.result);

};

Performance via Parallel Reads

let transaction = db.transaction("Books", "readonly");

let store = transaction.objectStore("Books");

let request1 = store.get(1);

let request2 = store.get(2);

request1.onsuccess = function(event) {

console.log("Book 1:", event.target.result);

};

request2.onsuccess = function(event) {

console.log("Book 2:", event.target.result);

};

Performance via No Schema Verification

- Faster data storage and retrieval as no schema validation is required

- Less overhead, especially for large datasets or frequently updated data

Why IndexedDB?

- Performance ✓

- Comprehensive API

- ACID Compliance

Comprehensive API (1/4)

| SQL Query | IndexedDB API |

|---|---|

| CREATE TABLE Books (id INT, title TEXT, author TEXT); | db.createObjectStore("Books", { keyPath: "id", autoIncrement: true }); |

| INSERT INTO Books (title, author) VALUES ('Book Title', 'Author'); | store.add({ title: 'Book Title', author: 'Author' }); |

| SELECT * FROM Books; | store.getAll(); |

| SELECT * FROM Books WHERE id = 1; | store.get(1); |

Comprehensive API (2/4)

| SQL Query | IndexedDB API |

|---|---|

| UPDATE Books SET author = 'New Author' WHERE id = 1; | let record = store.get(1); record.author = 'New Author'; store.put(record); |

| DELETE FROM Books WHERE id = 1; | store.delete(1); |

| CREATE INDEX ON Books (author); | store.createIndex("authorIndex", "author", { unique: false }); |

| SELECT * FROM Books WHERE author = 'Author'; | let index = store.index("authorIndex"); index.getAll("Author"); |

Comprehensive API (3/4)

| SQL Query | IndexedDB API |

|---|---|

| SELECT COUNT(*) FROM Books; | store.count(); |

| DELETE FROM Books; | store.clear(); |

| DROP TABLE Books; | db.deleteObjectStore("Books"); |

| ALTER TABLE Books ADD COLUMN published_date DATE; | No direct equivalent; modify existing records to add new field |

Comprehensive API (4/4)

| SQL Query | IndexedDB API |

|---|---|

| BEGIN TRANSACTION; | let transaction = db.transaction(["Books"], "readwrite"); |

| COMMIT; | transaction.oncomplete = function() { ... } |

| ROLLBACK; | transaction.abort(); |

| SELECT * FROM Books ORDER BY title; | let cursor = store.openCursor(); cursor.continue(); |

| ERROR HANDLING; | transaction.onerror = function(event) { console.error(event.target.errorCode); }; |

Limitations in IndexedDB API

- JOIN operations across multiple object stores

- Aggregations like SUM, AVG, MIN, MAX

- Complex WHERE clauses with AND, OR, NOT

- Subqueries (e.g., SELECT * FROM (SELECT ...))

- GROUP BY and HAVING clauses

- ALTER TABLE to modify schema after creation

- Full-text search within fields

- Triggers and stored procedures

Why IndexedDB?

- Performance ✓

- Comprehensive API ✓

- ACID Compliance

ACID Compliance of IndexedDB

| ACID Property | IndexedDB Compliance |

|---|---|

| Atomicity | Supported. Transactions are atomic, meaning all operations within a transaction are completed successfully, or none are applied. If an error occurs, the transaction can be aborted |

| Consistency | Partially. While IndexedDB does not enforce a schema, it maintains consistency within the data by ensuring that transactions either fully succeed or fail, preventing partial updates |

| Isolation | Partial. Transactions are isolated to an extent, meaning data changes in a transaction are not visible to other transactions until the transaction is complete. However, there is no strict locking mechanism as in traditional RDBMS |

| Durability | Supported. Once a transaction is committed, the data is guaranteed to be saved, even if the system crashes immediately afterward. Data is stored persistently in the browser |

Why IndexedDB?

- Performance ✓

- Comprehensive API ✓

- ACID Compliance ✓

Today

- Unstructured data ✓

- IndexedDB Part 2 ✓

- Code Lab

Code Lab

DocsToday

- Unstructured data ✓

- IndexedDB Part 2 ✓

- Code Lab ✓

Reminder

Please review the syllabus, homework, lab, and reading list

Data Modeling and Engineering

Today

- Data Modeling

- Data Engineering

- Questions Assignment 1

- Glossary and Terminology

Which of the following statements is true about IndexedDB?

- A) IndexedDB is a NoSQL database built into the browser, allowing for storage of significant amounts of structured data

- B) IndexedDB does not support transactions, making it unsuitable for complex operations.

- C) IndexedDB only supports string data types and cannot store objects

- D) IndexedDB can only be used in server-side applications and not in the browser

Which code snippets creates an object store in IndexedDB?

A)

var request = indexedDB.open('MyDatabase', 1);

request.onupgradeneeded = function(event) { // when created or updated

var db = event.target.result;

db.createObjectStore('MyObjectStore', { keyPath: 'id' });

};

B)

var db = new IndexedDB('MyDatabase');

db.createStore('MyObjectStore', 'id');

What happens if a database is created twice in IndexedDB with the same name but different version numbers?

A) The database is re-created and any existing data is overwritten

B) The database is ignored and no changes are made

C) The database is upgraded, and the

onupgradeneeded event is triggered.

D) The database is deleted and recreated from scratch.

What happens if a database is created twice in IndexedDB with the same name and the same version number?

A) The database is re-created and any existing data is overwritten

B) The database is ignored and no changes are made

C) The database is upgraded, and the

onupgradeneeded event is triggered.

D) The database is deleted and recreated from scratch.

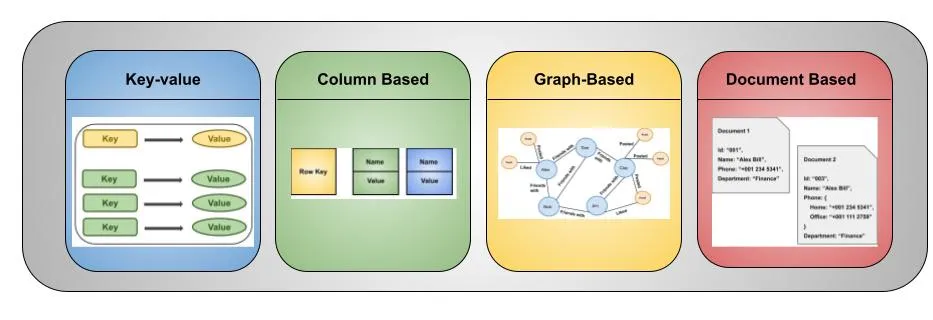

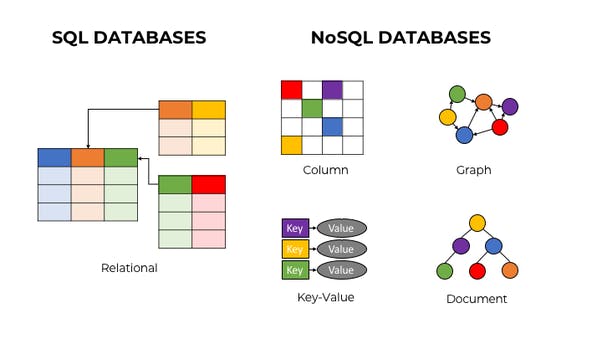

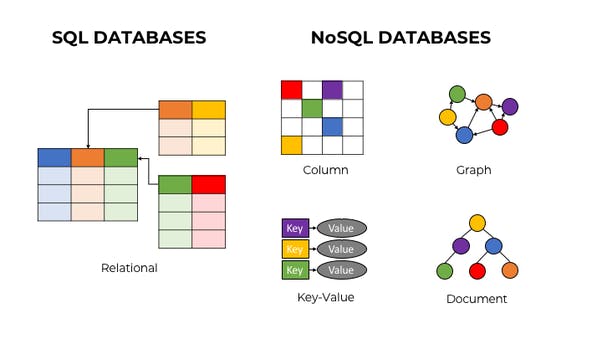

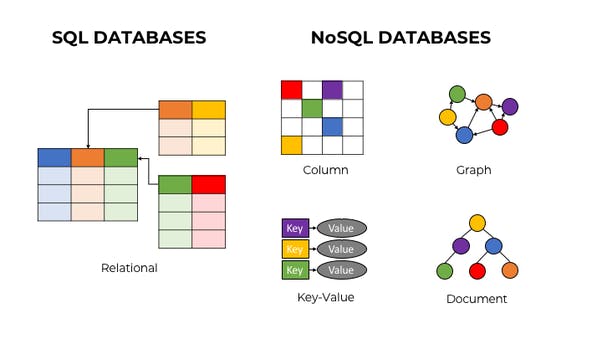

Data Modeling: SQL vs. NoSQL

- SQL: Structured schemas with tables, rows, and columns

- NoSQL: Flexible schema with key-value pairs for quick lookups

Data Modeling: SQL vs. NoSQL

- SQL: Models relationships between entities

- NoSQL: Models relationships within a single entity

SQL Example: Crops Data

-- Create table for crops and farms

CREATE TABLE Crops (

CropID INT PRIMARY KEY,

CropName VARCHAR(50),

PlantingDate DATE,

HarvestDate DATE,

FarmID INT

);

-- Insert crop data

INSERT INTO Crops VALUES

(1, 'Wheat', '2024-03-15', '2024-08-10', 1),

(2, 'Corn', '2024-04-01', '2024-09-15', 2);

NoSQL Example: Crops Data

{

"Farm:1": {

"Crops": [

{"CropID": "1", "CropName": "Wheat", "PlantingDate": "2024-03-15"}

]

},

"Farm:2": {

"Crops": [

{"CropID": "2", "CropName": "Corn", "PlantingDate": "2024-04-01"}

]

}

}

NoSQL Example: Real-Time Sensor Data

{

"Sensor:101": {

"Type": "Soil Moisture",

"Location": "Field A",

"LastReading": "2024-09-02T12:30Z",

"Value": "35%"

}

}

Querying Data

SQL Querying

SQL databases use a structured query language (SQL) for complex queries, enabling

powerful filtering, joining, and aggregation capabilities

Process:

- SQL Query → Returns Structured Data

NoSQL Querying

NoSQL databases use flexible key-based lookups and simple filtering. Queries

typically

return JSON values and may require additional processing with JavaScript

Process:

- NoSQL Query → Returns JSON Data

- JavaScript Query → Additional Processing

- Result → Processed Data Output

⚠️ Warning: The schema of the JSON data must be known for accurate

processing and aggregation. Since NoSQL schemas are flexible and not enforced,

ensure

proper schema knowledge for consistent data handling.

SQL Querying

SQL databases use a structured query language (SQL) for complex queries, enabling powerful filtering, joining, and aggregation capabilities

Process:

- SQL Query → Returns Structured Data

NoSQL Querying

NoSQL databases use flexible key-based lookups and simple filtering. Queries typically return JSON values and may require additional processing with JavaScript

Process:

- NoSQL Query → Returns JSON Data

- JavaScript Query → Additional Processing

- Result → Processed Data Output

⚠️ Warning: The schema of the JSON data must be known for accurate processing and aggregation. Since NoSQL schemas are flexible and not enforced, ensure proper schema knowledge for consistent data handling.

SQL vs. NoSQL Data Structures

SQL Query & Aggregation

-- Sample SQL Query

SELECT CropName, PlantingDate, HarvestDate

FROM Crops

WHERE FarmID = 1;

-- Average Harvest Duration Calculation

SELECT AVG(DATEDIFF(DAY, PlantingDate, HarvestDate)) AS AvgHarvestDays

FROM Crops

WHERE FarmID = 1;

Structured query language (SQL) allows direct querying and aggregation, such as

calculating averages

NoSQL Query & Aggregation

// JavaScript to query IndexedDB and calculate average harvest days

let db; // Assume `db` is an open IndexedDB instance

// Query to get data from IndexedDB

let transaction = db.transaction(['Crops'], 'readonly');

let objectStore = transaction.objectStore('Crops');

let request = objectStore.getAll();

request.onsuccess = function(event) {

let crops = event.target.result;

// Example of processing data to calculate average harvest days

let totalHarvestDays = crops.reduce((sum, crop) => {

let plantingDate = new Date(crop.PlantingDate);

let harvestDate = new Date(crop.HarvestDate);

let days = (harvestDate - plantingDate) / (1000 * 60 * 60 * 24);

return sum + days;

}, 0);

let avgHarvestDays = totalHarvestDays / crops.length;

console.log('Average Harvest Days:', avgHarvestDays);

};

NoSQL querying returns JSON data, which can be processed with JavaScript for

aggregations

such as average calculations

SQL Query & Aggregation

-- Sample SQL Query

SELECT CropName, PlantingDate, HarvestDate

FROM Crops

WHERE FarmID = 1;

-- Average Harvest Duration Calculation

SELECT AVG(DATEDIFF(DAY, PlantingDate, HarvestDate)) AS AvgHarvestDays

FROM Crops

WHERE FarmID = 1;

Structured query language (SQL) allows direct querying and aggregation, such as calculating averages

NoSQL Query & Aggregation

// JavaScript to query IndexedDB and calculate average harvest days

let db; // Assume `db` is an open IndexedDB instance

// Query to get data from IndexedDB

let transaction = db.transaction(['Crops'], 'readonly');

let objectStore = transaction.objectStore('Crops');

let request = objectStore.getAll();

request.onsuccess = function(event) {

let crops = event.target.result;

// Example of processing data to calculate average harvest days

let totalHarvestDays = crops.reduce((sum, crop) => {

let plantingDate = new Date(crop.PlantingDate);

let harvestDate = new Date(crop.HarvestDate);

let days = (harvestDate - plantingDate) / (1000 * 60 * 60 * 24);

return sum + days;

}, 0);

let avgHarvestDays = totalHarvestDays / crops.length;

console.log('Average Harvest Days:', avgHarvestDays);

};

NoSQL querying returns JSON data, which can be processed with JavaScript for aggregations such as average calculations

NoSQL JSON Structure and Schema Considerations

NoSQL JSON: Crops

{

"Farm:1": {

"Crops": [

{"CropID": "1", "CropName": "Wheat", "PlantingDate": "2024-03-15", "HarvestDate": "2024-08-10"}

]

},

"Farm:2": {

"Crops": [

{"CropID": "2", "CropName": "Corn", "PlantingDate": "2024-04-01", "HarvestDate": "2024-09-15"}

]

}

}

Flexible data structure using nested key-value pairs. The schema can vary between

documents

and might not be uniform

⚠️ Important: To ensure accurate processing and aggregation, it's

crucial

to know the schema of the JSON data. In NoSQL, since the schema is not enforced, you

must

understand and manage it at the application level to maintain consistency and

correctness in

data handling.

NoSQL JSON: Crops

{

"Farm:1": {

"Crops": [

{"CropID": "1", "CropName": "Wheat", "PlantingDate": "2024-03-15", "HarvestDate": "2024-08-10"}

]

},

"Farm:2": {

"Crops": [

{"CropID": "2", "CropName": "Corn", "PlantingDate": "2024-04-01", "HarvestDate": "2024-09-15"}

]

}

}

Flexible data structure using nested key-value pairs. The schema can vary between documents and might not be uniform

⚠️ Important: To ensure accurate processing and aggregation, it's crucial to know the schema of the JSON data. In NoSQL, since the schema is not enforced, you must understand and manage it at the application level to maintain consistency and correctness in data handling.

Evaluating NoSQL Data Modeling

Pros

- Flexible Schema: Allows for varying structures and rapid schema

changes

- Nested Data: Can model complex hierarchical relationships

within a

single document

- Efficient Reads: Reduces the need for joins by storing related

data

together

- Scalability: Easily scales horizontally to handle large volumes

of

data

Cons

- Complex Queries: Nested structures can make querying and data

manipulation more complex

- Data Duplication: May lead to data duplication if not managed

properly

- Performance Issues: Large and deeply nested documents can

impact

performance

- Inconsistent Structure: Variability in document structure can

lead

to data inconsistency

Pros

- Flexible Schema: Allows for varying structures and rapid schema changes

- Nested Data: Can model complex hierarchical relationships within a single document

- Efficient Reads: Reduces the need for joins by storing related data together

- Scalability: Easily scales horizontally to handle large volumes of data

Cons

- Complex Queries: Nested structures can make querying and data manipulation more complex

- Data Duplication: May lead to data duplication if not managed properly

- Performance Issues: Large and deeply nested documents can impact performance

- Inconsistent Structure: Variability in document structure can lead to data inconsistency

Case Study I - Precision Farming Database

Requirements Overview

- Sensor Data: Real-time, high volume. Data from IoT sensors monitoring soil moisture, temperature, and humidity, requiring efficient processing and storage

- Weather Data: Data from APIs. External weather data including rainfall, temperature, and wind speed, crucial for predictive analysis and decision-making

- Drone Imagery: High-resolution images & videos. Large files from drones used for crop health monitoring, demanding robust storage solutions

- Farm Management Data: Information on crop types, planting schedules, and irrigation plans, essential for managing daily operations

Which type of database (SQL or NoSQL/IndexedDB) would you recommend for each requirement, and why?

Today

- Data Modeling ✓

- Data Engineering

- Questions Assignment 1

- Glossary and Terminology

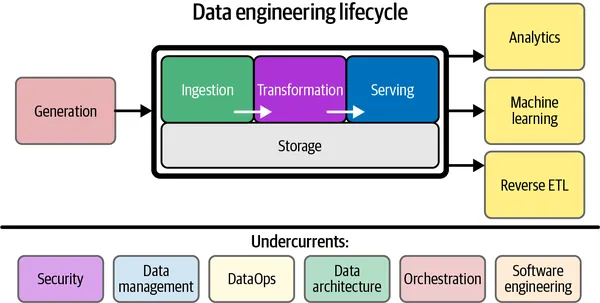

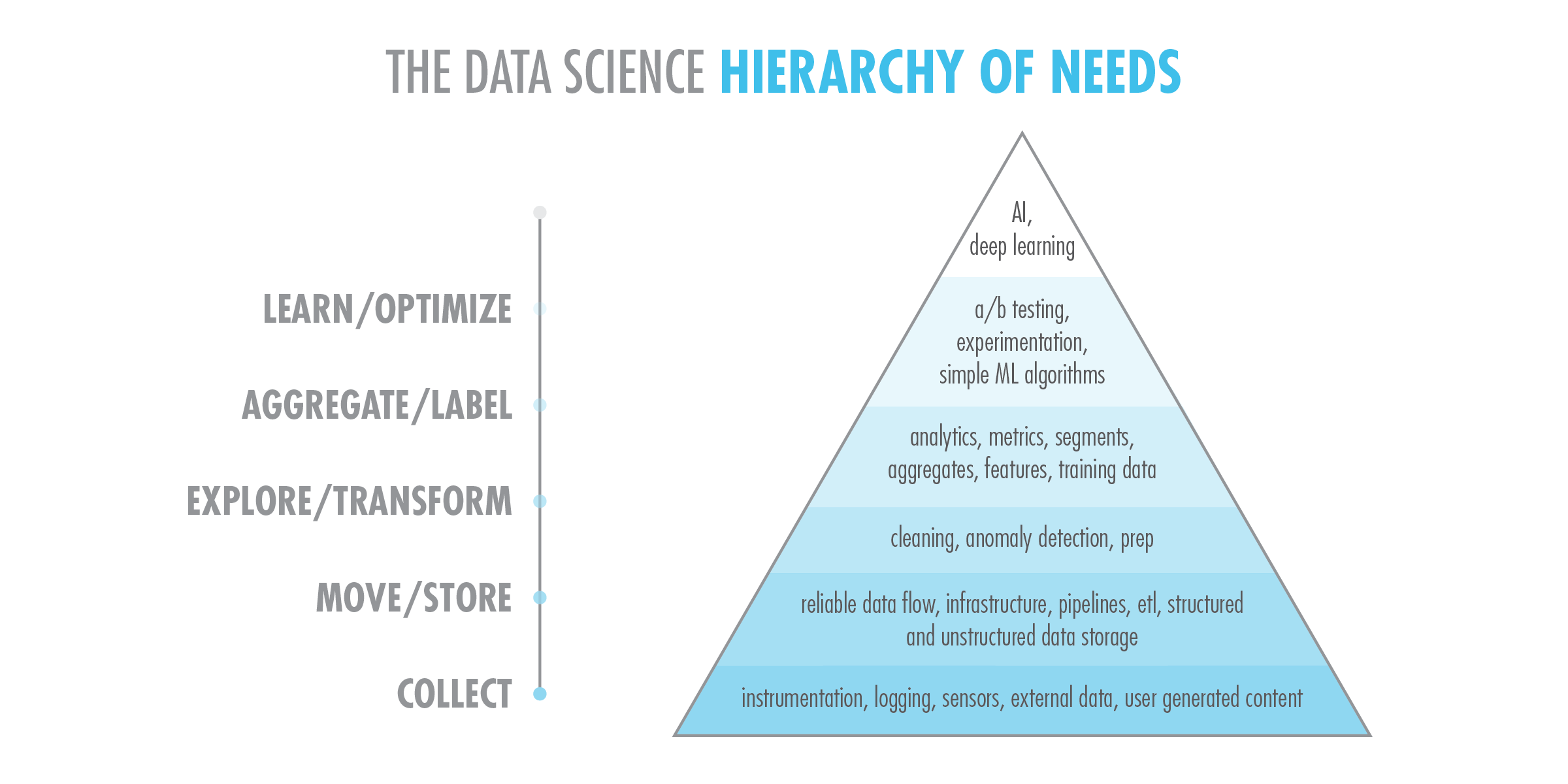

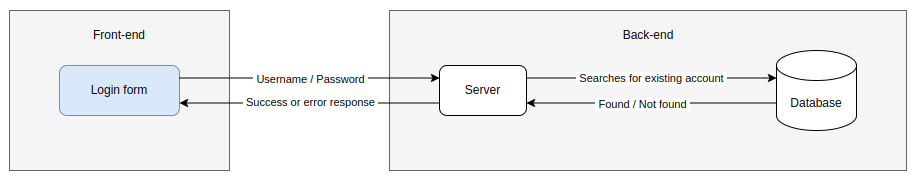

What is Data Engineering?

- Data Collection and Ingestion

- Data Transformation and Cleaning

- Data Storage and Management

- Data Pipeline Development

- Data Security and Governance

- Data Versioning

- Data Maintenance

- Data Archiving

Integration of Software Engineering with Data Engineering

How can principles of software engineering be integrated with data engineering practices to improve the reliability, scalability, and maintainability of data-driven systems in agriculture?

{

"field_id": "North_Section",

"average_soil_moisture": "30%",

"average_temperature": "19°C",

"average_humidity": "62%",

"time_period": "2024-09-01 to 2024-09-07"

}

At which stage of the data hierarchy does this aggregated data belong?

Today

- Data Modeling ✓

- Data Engineering ✓

- Questions Assignment 1

- Glossary and Terminology

How to store assets?

| Image Stored as URL | Image Stored as Blob |

|---|---|

http://example.com/image.jpg

|

47 49 46 38 39 61

(Example of binary data) |

How to store assets?

| Store Image by URL | Store Image as Blob |

|---|---|

|

|

Storing Images in IndexedDB

Auto-Incrementing Entries in IndexedDB

// Open IndexedDB and set up auto-increment

const request = indexedDB.open("LibraryDB", 1);

request.onupgradeneeded = function(e) {

let db = e.target.result;

if (!db.objectStoreNames.contains("books")) {

db.createObjectStore("books", { keyPath: "id", autoIncrement: true });

}

};

request.onsuccess = function(e) {

let db = e.target.result;

let tx = db.transaction("books", "readwrite");

let store = tx.objectStore("books");

store.add({ title: "Sample Book", author: "Author", timestamp: new Date() });

tx.oncomplete = () => console.log("Book added on refresh.");

};

request.onerror = function(e) {

console.error("Error", e.target.errorCode);

};

Each browser refresh adds a new book entry with auto-incremented ID

Limitated Aggregates in IndexedDB

- Data types can vary, complicating aggregates like sum or average

- Data is accessed primarily by keys, not suiting full data set scans

Today

- Data Modeling ✓

- Data Engineering ✓

- Questions Assignment 1 ✓

- Glossary and Terminology

Today

- Data Modeling ✓

- Data Engineering ✓

- Questions Assignment 1 ✓

- Glossary and Terminology ✓

Today

- Data Modeling Part 2

- Data Engineering Part 2

- Assignment Review

- Roster Verification

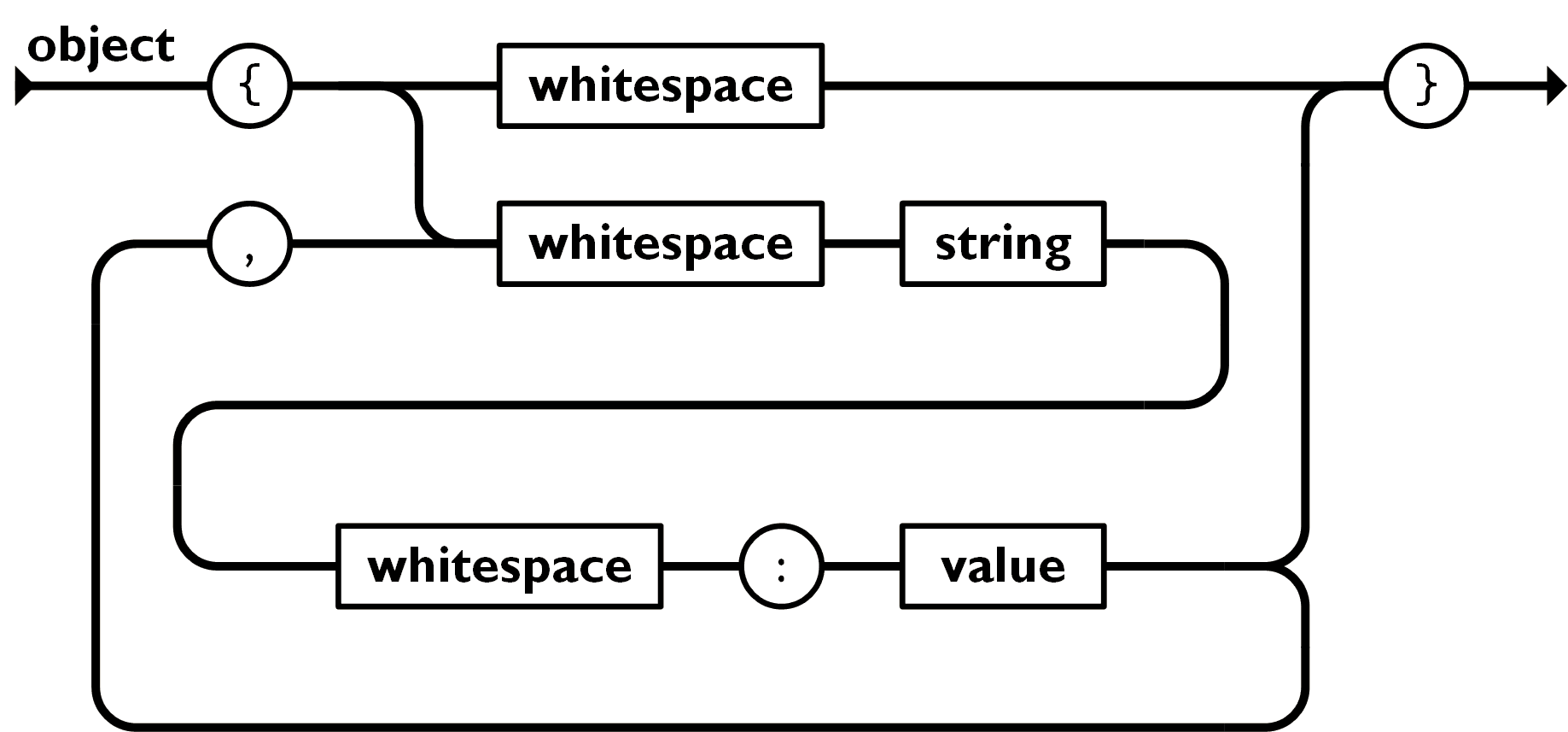

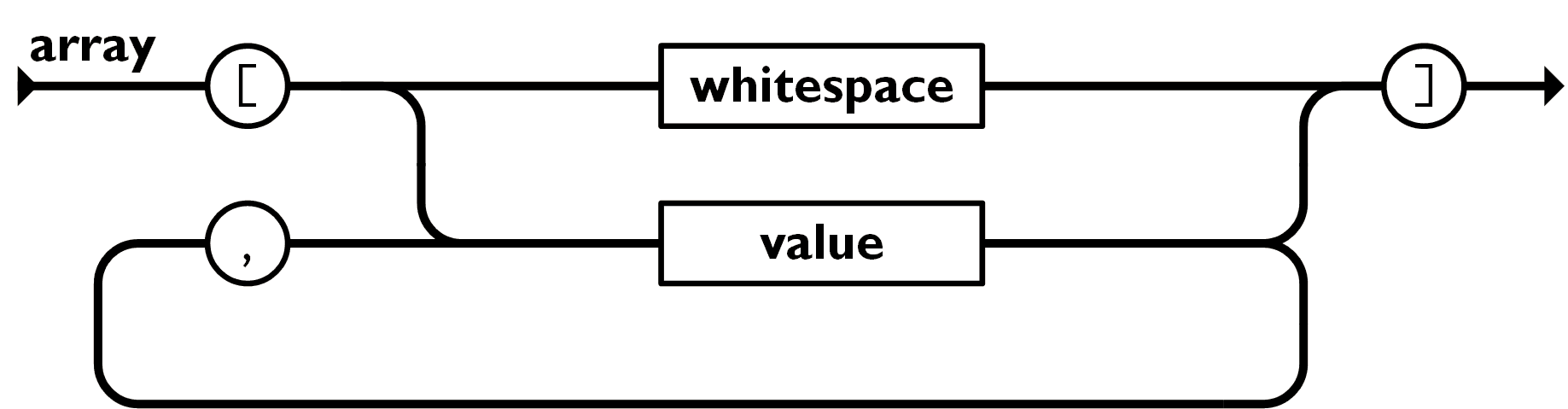

Modeling JSON Documents Data

- Is the JSON structure valid?

- Is the JSON schema validation in place? (next week)

- Are values validated correctly? (next week)

Is the JSON structure valid?

JSON Object Structure

{}

JSON Object Structure

{

"name1": "value1",

"name2": "value2"

}

Is the JSON structure valid?

JSON Array Structure

[

"value1",

"value2",

"value3"

]

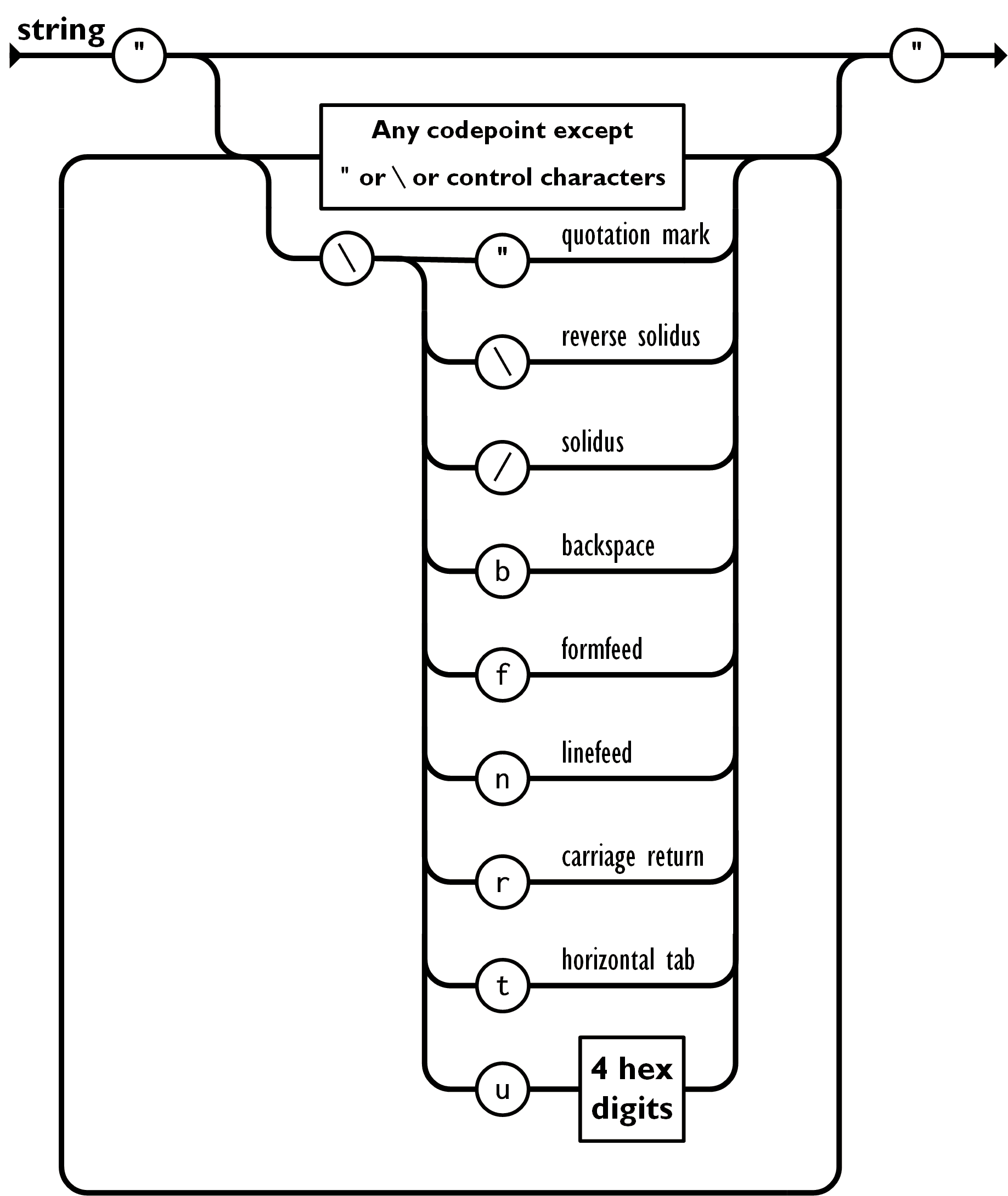

JSON String Structure

{

"name": "John Doe",

"greeting": "Hello, world",

"city": "Stockton",

"language": "JavaScript",

"quote": "He said, \"Hello!\"",

"hexExample": "\u0048\u0065\u006C\u006C\u006F", // "Hello" in hexadecimal

"withTab": "Line1\tLine2" // Tab between two lines

}

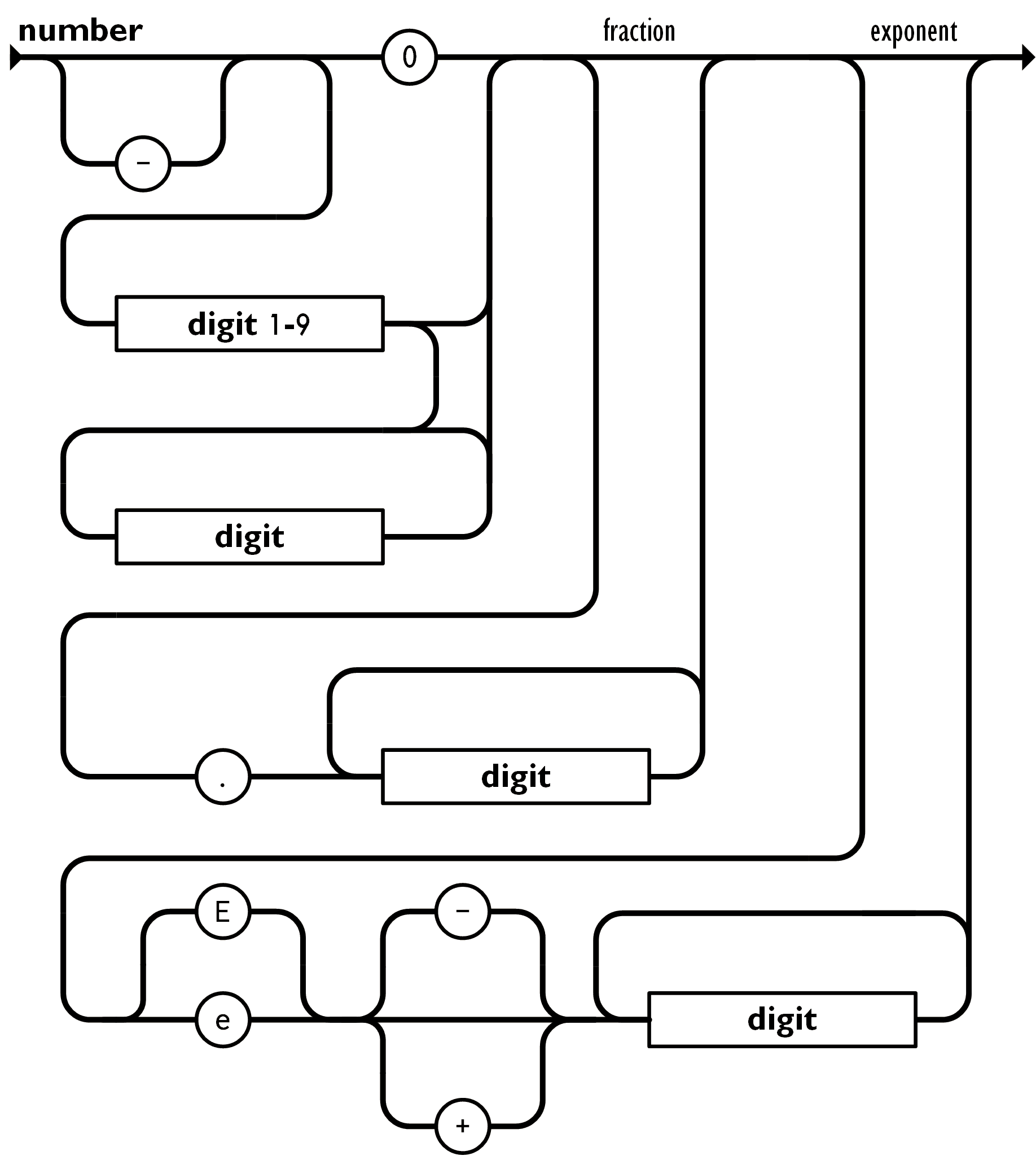

JSON Number Structure

{

"integer": 42,

"negative": -99,

"float": 3.14159,

"exponential": 1.23e4 // Equivalent to 12300

}How do we validate the data model is correctly structured?

Is this a valid JSON structure?

{

"name": "John",

"age": 30

"city": "New York"

}Is this a valid JSON structure?

{

"name": "Jane",

"age": 25,

"city": "Los Angeles",

}

Is this a valid JSON structure?

{

'name': 'Mike',

'age': 40,

'city': 'Chicago'

}How do we automatically validate the data model is correctly structured?

function isJSONValid(jsonString) {

try {

JSON.parse(jsonString);

return true;

} catch (e) {

return false;

}

}

const jsonString = '{"name": "John", "age": 30}';

if (isJSONValid(jsonString)) {

console.log("Valid JSON");

} else {

console.log("Invalid JSON");

}

Modeling JSON Documents Data

- Is the JSON structure valid?

- Is the JSON schema validation in place? (next week)

- Are values validated correctly? (next week)

How to Model Primary Key / ID?

Modeling JSON Object Keys Options

- Custom Key: Manually assign a meaningful key (e.g., "userID")

- Auto-Incrementing: Sequentially increasing number as a key

- Universally Unique Identifier (UUID): Generate a unique key

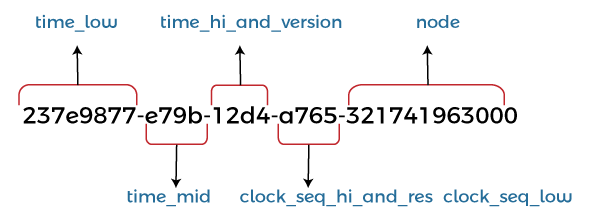

Modeling JSON Object Keys via UUID

UUIDs ensures unique identification / avoid collisions

function generateUUID() {

return crypto.randomUUID();

}

const jsonObject = {

[generateUUID()]: {

"name": "John Doe",

"age": 30

}

};

How UUIDs Are Generated?

- Random numbers

- Timestamps

- Unique identifiers (e.g., MAC address)

UUID (Universally Unique Identifier) is a 128-bit number generated. UUIDs are designed to be globally unique across space and time, with a very low probability of duplication

Agriculture JSON Object Example

{

"uuid": "3f5e1fbe-9c4f-4d7f-b8fa-9b5aef02e573",

"crop": "Wheat",

"farmLocation": "North Field",

"yield": 3000,

"unit": "kg",

"harvestDate": "2024-09-04"

}

Case Study II - Library Management System

Requirements Overview

- Book Inventory: Cataloging information including ISBN, title, author, genre, and availability status, crucial for managing book lending and returns

- Member Records: Detailed information on library members, such as name, contact details, membership type, and borrowing history, requiring secure and organized storage

- Transaction Logs: High volume. Records of book checkouts, returns, and fines, which need to be tracked efficiently over time for auditing purposes

- Digital Media: Storage of e-books, audiobooks, and digital magazines, requiring flexible storage solutions to handle various formats and sizes.

Which type of database (SQL or NoSQL/IndexedDB) would you recommend for each requirement, and why?

Today

- Data Modeling Part 2 ✓

- Data Engineering Part 2

- Assignment Review

- Roster Verification

When verifying data model?

Data Model Verification

Always verify the data model before reading or writing to:

- Ensure consistency

- Maintain data integrity

- Prevent errors

What is tranformation?

Map - Filter - Reduce

Library: Map Example

Map: Convert the list of books to show only titles

const books = [

{ uuid: "a1b2c3d4-5678-9abc-def0-1234567890ab", title: "Book A", author: "Author 1", pages: 200, isbn: "978-3-16-148410-0" },

{ uuid: "e5f6g7h8-9101-1jkl-mnop-0987654321cd", title: "Book B", author: "Author 2", pages: 150, isbn: "978-1-23-456789-7" },

{ uuid: "i9j1k2l3-qrst-4567-uvwx-2345678901yz", title: "Book C", author: "Author 3", pages: 300, isbn: "978-0-12-345678-9" }

];

const titles = books.map(book => book.title);

console.log(titles); // ["Book A", "Book B", "Book C"]

Library: Filter Example

Filter: Show books with more than 200 pages

const books = [

{ uuid: "a1b2c3d4-5678-9abc-def0-1234567890ab", title: "Book A", author: "Author 1", pages: 200, isbn: "978-3-16-148410-0" },

{ uuid: "e5f6g7h8-9101-1jkl-mnop-0987654321cd", title: "Book B", author: "Author 2", pages: 150, isbn: "978-1-23-456789-7" },

{ uuid: "i9j1k2l3-qrst-4567-uvwx-2345678901yz", title: "Book C", author: "Author 3", pages: 300, isbn: "978-0-12-345678-9" }

];

const largeBooks = books.filter(book => book.pages > 200);

console.log(largeBooks);

// [{ uuid: "i9j1k2l3-qrst-4567-uvwx-2345678901yz", title: "Book C", author: "Author 3", pages: 300, isbn: "978-0-12-345678-9" }]

Library: Reduce Example

Reduce: Calculate the total number of pages

const books = [

{ uuid: "a1b2c3d4-5678-9abc-def0-1234567890ab", title: "Book A", author: "Author 1", pages: 200, isbn: "978-3-16-148410-0", stock: "Available" },

{ uuid: "e5f6g7h8-9101-1jkl-mnop-0987654321cd", title: "Book B", author: "Author 2", pages: 150, isbn: "978-1-23-456789-7", stock: "Out of Stock" },

{ uuid: "i9j1k2l3-qrst-4567-uvwx-2345678901yz", title: "Book C", author: "Author 3", pages: 300, isbn: "978-0-12-345678-9", stock: "Available" }

];

const totalPages = books.reduce((sum, book) => sum + book.pages, 0);

console.log(totalPages); // 650

Review Data Modeling and Engineering

Today

- Data Modeling Part 2 ✓

- Data Engineering Part 2 ✓

- Assignment Review

- Roster Verification

Arrange: Set Up the Test Data

// Arrange

const sensorId = 'sensor123';

const expected = {

id: 'sensor123',

temperature: 24.5,

humidity: 78,

soilMoisture: 30

};

Act: Execute the Function

// Act

const result = getSensorData(sensorId);

Assert: Verify the Results

// Assert

console.assert(result.id === sensorId,

'Test failed: Sensor ID does not match.');

console.assert(result.temperature >= 0 && result.temperature <= 50,

'Test failed: Temperature is out of the range (0-50°C).');

console.assert(result.humidity >= 0 && result.humidity <= 100,

'Test failed: Humidity is out of the range (0-100%).');

console.assert(result.soilMoisture >= 0 && result.soilMoisture <= 100,

'Test failed: Soil moisture is out of the range (0-100%).');

Today

- Data Modeling Part 2 ✓

- Data Engineering Part 2 ✓

- Assignment Review ✓

- Roster Verification

Reminder

Please review the syllabus, homework, lab, and reading list

Data Quality and Standards

Today

- Why Data Quality?

- Definition of Data Quality

- Implementation of Data Quality

- Introduction MongoDB

- Project Part 1, Homework 2, Lab 2

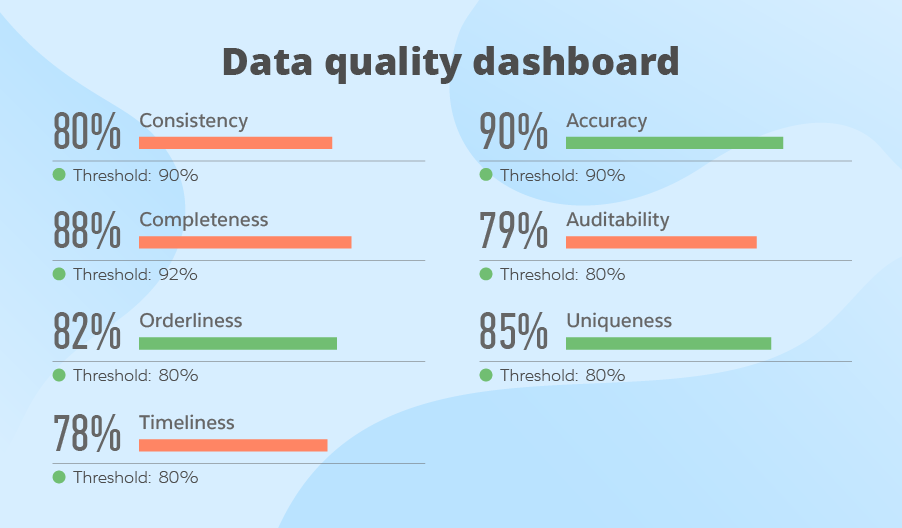

Definition of Data Quality

- Integrity: Data maintains accuracy and consistency

- Validity: Data adheres to established formats and rules

- Consistency: Data is consistent across datasets

- Accuracy: Data correctly reflects real-world conditions

- Completeness: All necessary data is included; essential for analysis

- Timeliness: Data is available when needed; current conditions

- Uniqueness: No unnecessary duplicates; each entry is distinct

Definition of Data Quality

- Healthcare: Compliance with HIPAA for data privacy, accuracy in patient records

- Automotive: Compliance with ISO/TS 16949 for quality and safety standards

- Manufacturing: Conformity with ISO 9001 for quality management systems

- Retail: Compliance with PCI DSS for payment data security, accuracy in inventory data

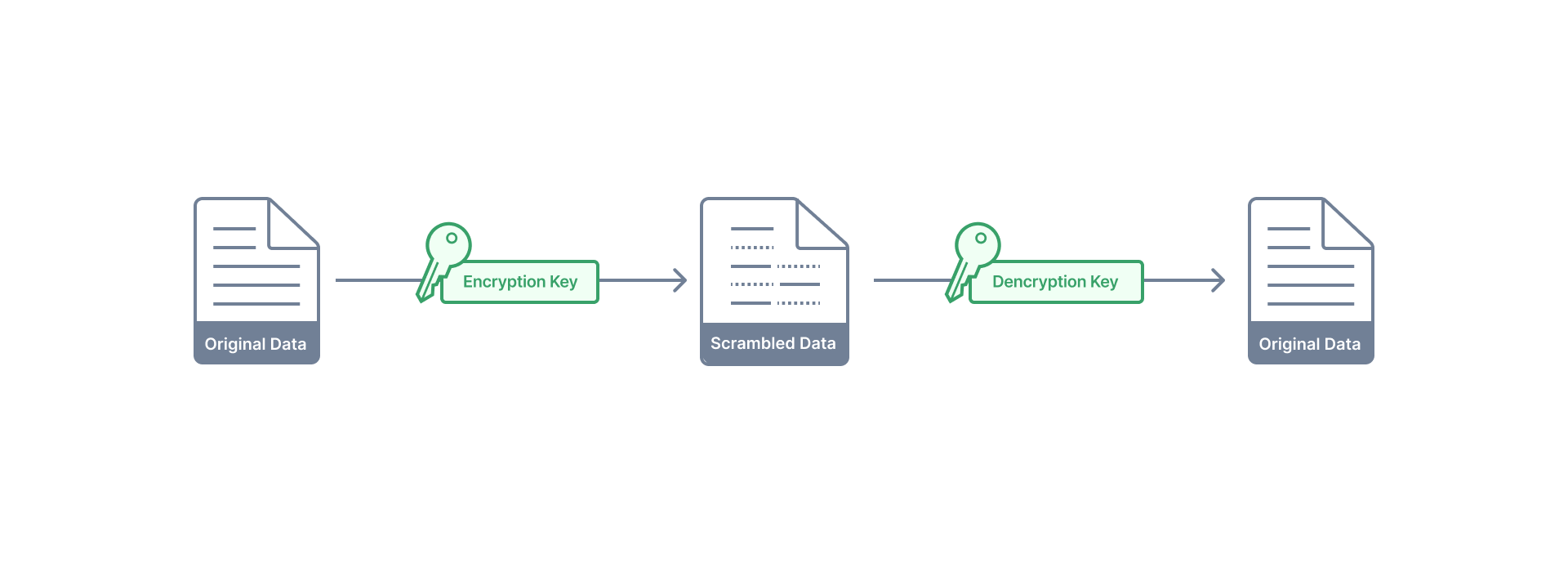

Implementing Data Integrity

Data Tampering

Data tampering involves unauthorized alterations or manipulations of data, potentially compromising its integrity during transmission or storage.| Original JSON | Tampered JSON |

|---|---|

|

|

Verification Data Integrity

{

"patientRecord": {

"uuid": "123e4567-e89b-12d3-a456-426614174000",

"clinicalRecord": {

"patientName": "John Doe",

"diagnosisCode": "E11.9",

},

"metadata": {

"patientRecordChecksum": "79e1ed3dc3250d3a9cc80f9ab...",

"author": "Dr. Jane Smith",

}

}

}async function createChecksum(data) {

const dataAsBytes = new TextEncoder().encode(data);

const hashBuffer = await crypto.subtle.digest('SHA-256', dataAsBytes);

const hashHex = Array.from(new Uint8Array(hashBuffer))

.map(byte => byte.toString(16).padStart(2, '0'))

.join('');

return hashHex;

}

// Example usage:

createChecksum('patientRecord').then(checksum => console.log('Checksum:', checksum));import hashlib

def create_checksum(data):

# Convert data to bytes

data_as_bytes = data.encode('utf-8')

# Create SHA-256 hash

hash_object = hashlib.sha256(data_as_bytes)

# Convert hash to hexadecimal format

hash_hex = hash_object.hexdigest()

return hash_hex

# Example usage

checksum = create_checksum('patientRecord')

print('Checksum:', checksum)

What if data is lost in transit?

HTTPS, Bluetooth, Wi-Fi (802.11), Ethernet (IEEE 802.3), Cellular Networks (3G, 4G, 5G), Satellite CommunicationEarly Build Data Quality Visibility

Definition of Data Quality

- Integrity: Data maintains accuracy and consistency ✓

- Validity: Data adheres to established formats and rules

- Consistency: Data is consistent across datasets

- Accuracy: Data correctly reflects real-world conditions

- Completeness: All necessary data is included; essential for analysis

- Timeliness: Data is available when needed; current conditions

- Uniqueness: No unnecessary duplicates; each entry is distinct

Verify Valid Format, Range, Type, and Value

{

"patientRecord": {

"uuid": "123e4567-e89b-12d3...", // Format: UUID

"clinicalRecord": {

"patientName": "John Doe", // Type: String, Value: Non-empty

"diagnosisCode": "E11.9" // Format: ICD-10 Code, Value: Valid code

},

"metadata": {

"createdTimestamp": "2024-09-08T12:34:56Z", // Format: ISO 8601, Value: Valid timestamp

"patientRecordChecksum": "79e1ed3dc3250...", // Type: String (SHA-256), Value: Correct hash

"author": "Dr. Jane Smith" // Type: String, Value: Valid name

}

}

}

SQL Database

What mechanism does SQL offer to achieve data integrity?Format and Type Validation

- Format Validation:

- UUID: Use regular expressions to ensure it follows the UUID format

- Timestamp: Use regular expressions to verify ISO 8601 format

- Type Validation:

- Name: Ensure it's a string and non-empty

- Diagnosis Code: Verify that it's a valid code

- Author: Check that it's a string and non-empty

Range and Value Validation

- Range Validation:

- Temperature: Verify the value falls within a realistic range, e.g., -50°C to 50°C.

- Date: Check that the date is within a valid range, e.g., not in the future

- Value Validation:

- Diagnosis Code: Ensure it is a valid and recognized code (e.g., ICD-10)

- Status: Check if the status is one of the allowed values (e.g., "active", "inactive")

- Quantity: Verify that the value is a positive number and meets expected criteria (e.g., greater than 0)

Definition of Data Quality

- Integrity: Data maintains accuracy and consistency ✓

- Validity: Data adheres to established formats and rules ✓

- Consistency: Data is consistent across datasets

- Accuracy: Data correctly reflects real-world conditions

- Completeness: All necessary data is included; essential for analysis

- Timeliness: Data is available when needed; current conditions

- Uniqueness: No unnecessary duplicates; each entry is distinct

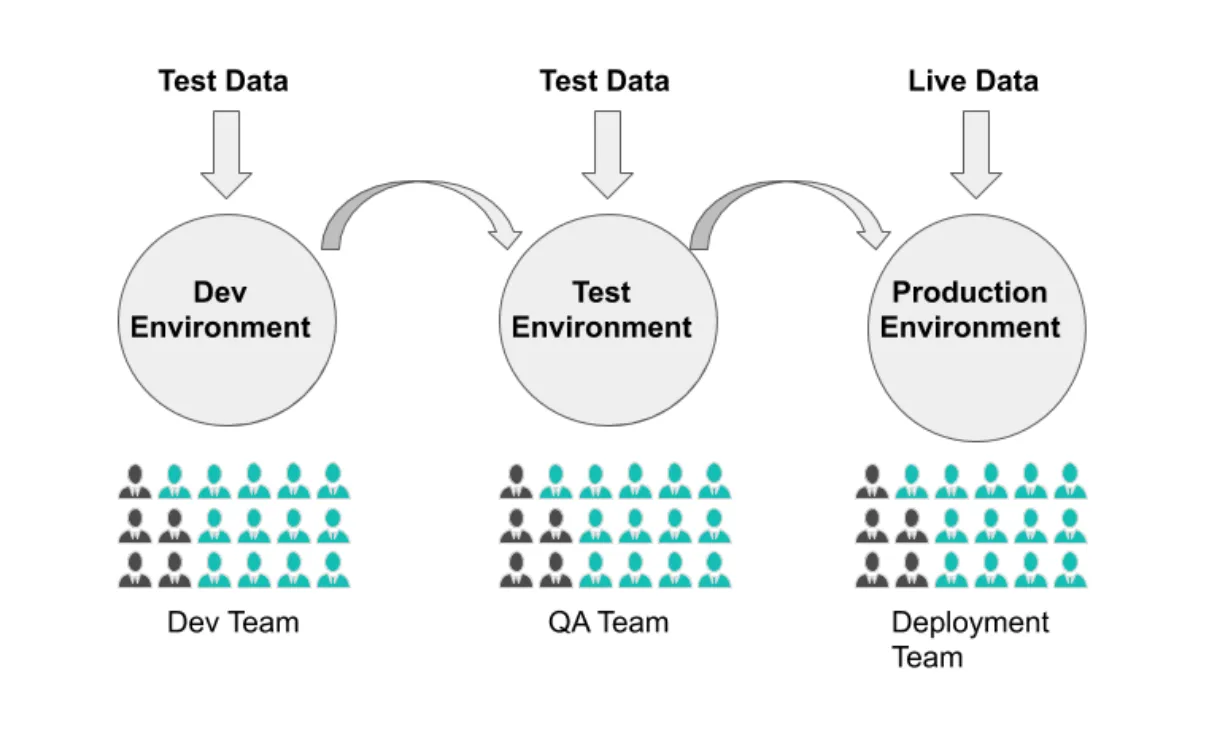

Often, 5% of live data is used for development and testing purposes. What do you recommend?

When would You recommend to hire a data quality engineer?

Case Study: Bike Store Data Quality

Data Quality Requirements Overview

- Accuracy: Ensure product descriptions, pricing, and inventory levels are correct to prevent sales issues and customer dissatisfaction

- Completeness: All product listings should have complete information including specifications, availability, and compatible accessories

- Consistency: Data should be consistent across all platforms (online store, in-shop digital systems, mobile apps) to prevent confusion and errors

- Timeliness: Stock updates and promotional information should be updated in real-time to reflect current availability and offers

- Reliability: Order history and customer data must be reliable for effective CRM and marketing strategies

What strategies and technologies would you recommend to meet these data quality requirements?

Reading Material

Today

- Why Data Quality? ✓

- Definition of Data Quality ✓

- Implementation of Data Quality ✓

- Introduction MongoDB

- Project Part 1, Homework 2, Lab 2

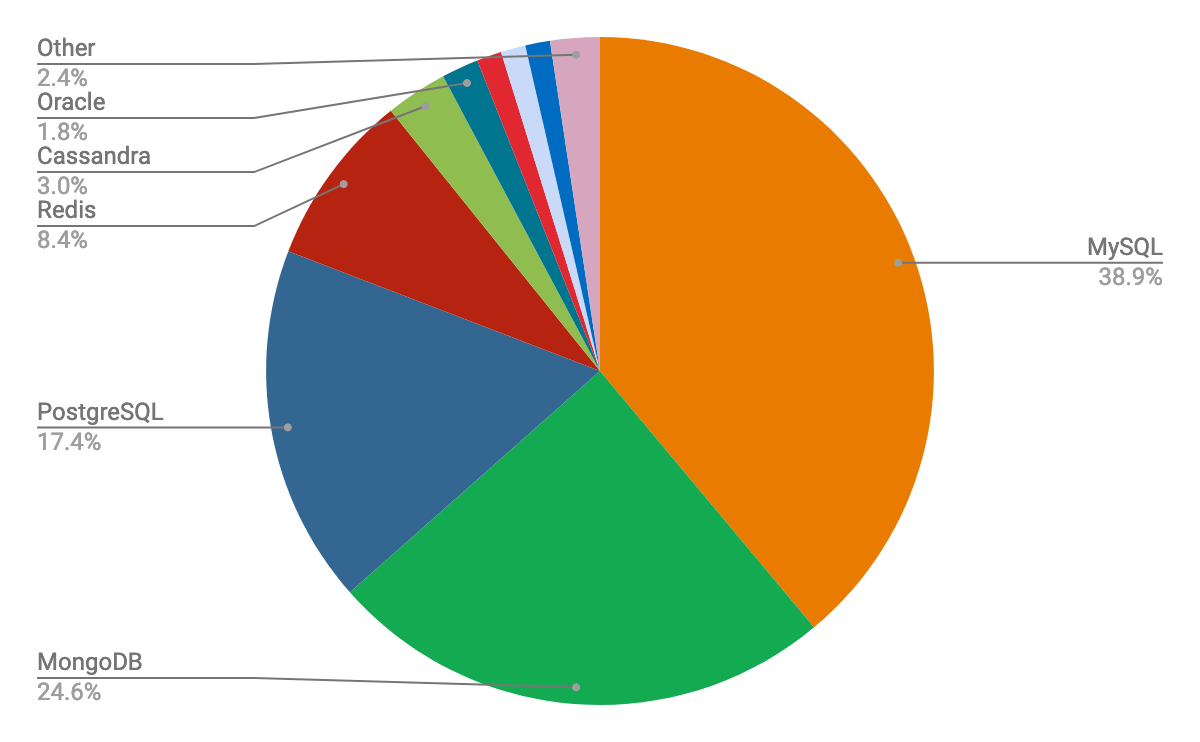

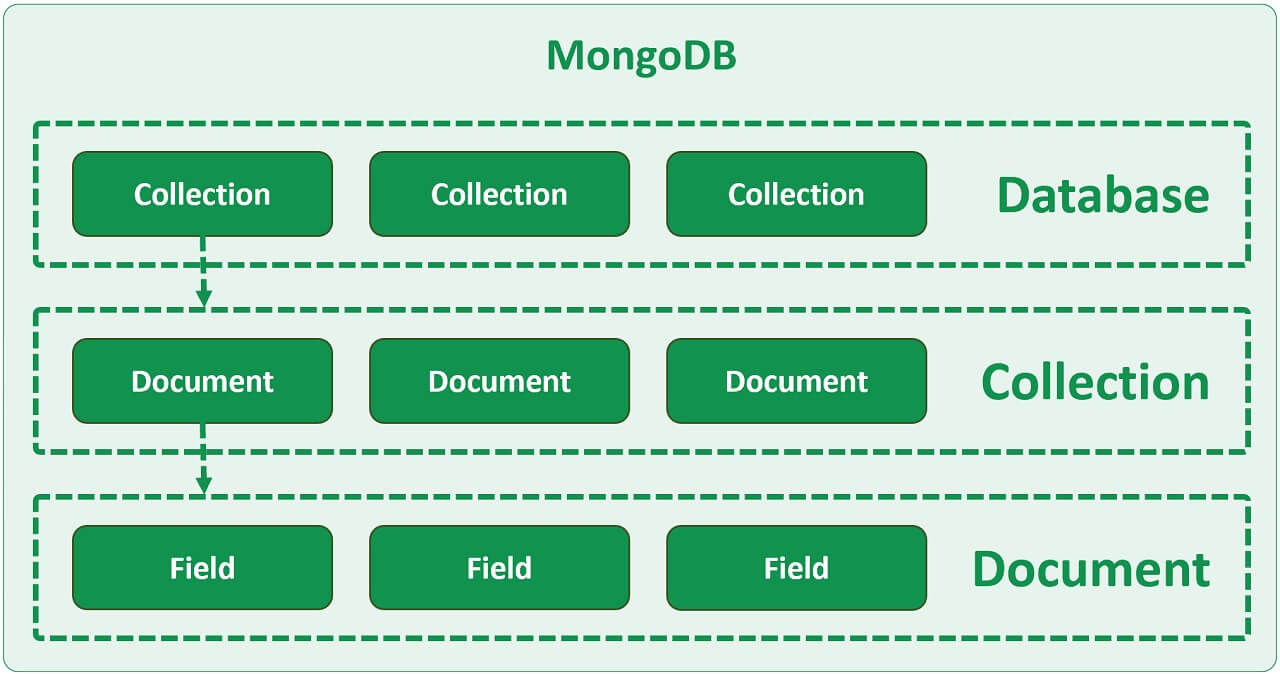

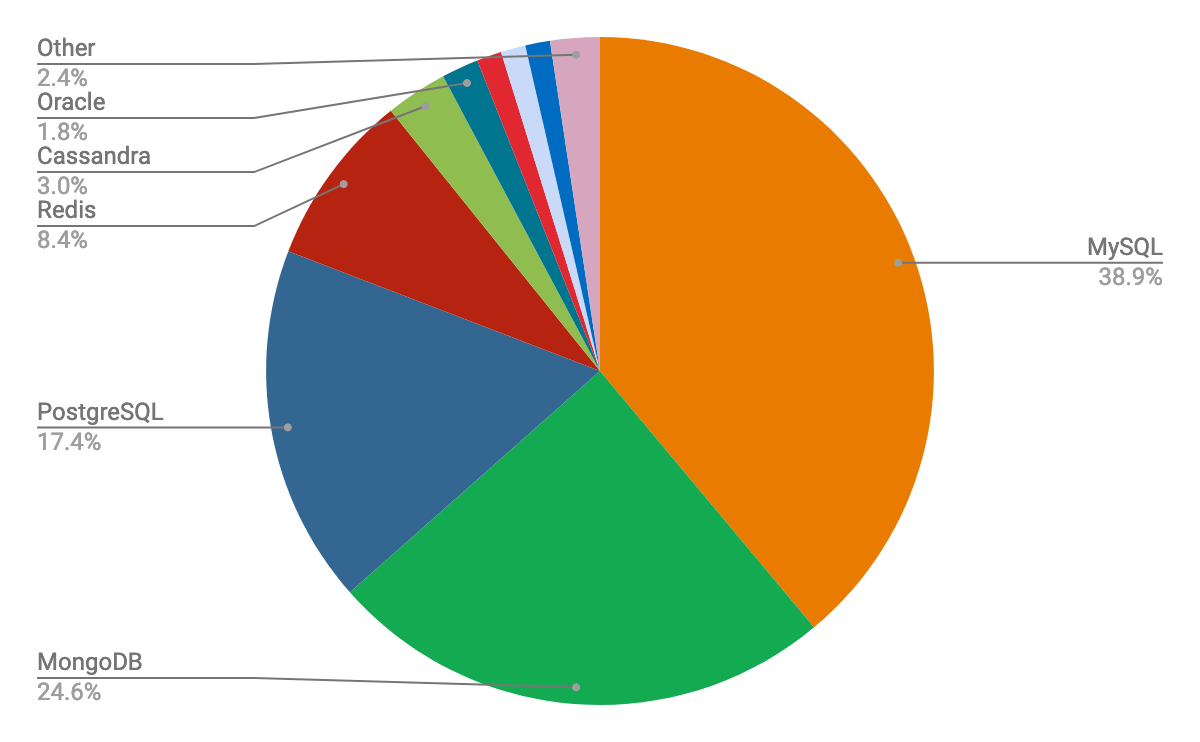

Introduction MongoDB

Reference (2020): MongoDB: Consistent Enterprise Market Share Gains

- 2007: MongoDB development begins at 10gen

- 2009: Released as open-source document database

- 2010: Commercial support options introduced

- 2013: 10gen rebrands to MongoDB, Inc

- 2014: WiredTiger storage engine introduced

- 2016: Launch of MongoDB Atlas cloud service

- 2017: MongoDB goes public on NASDAQ

- 2019: Release of MongoDB 4.2 with advanced features

- 2021: Introduction of MongoDB 5.0, expanding use cases

- Document-Oriented: JSON-like document storage

- Dynamic Schemas: No predefined schema required

- Scalability: Easy scaling through sharding

- Replication: Automatic replication for high availability

- Indexing: Comprehensive indexing options

- Aggregation Framework: In-database data processing

- Ad Hoc Queries: Supports rich query operations

- Storage Engines: Multiple options like WiredTiger

- ACID Transactions: Multi-document transaction support

Database Terminology

| Concept | MongoDB | IndexedDB | SQL |

|---|---|---|---|

| Table/Collection | Collection | Object Store | Table |

| Row/Record | Document | Record | Row |

| Column/Field | Field | Key/Value | Column |

| Query/Search | Query | Transaction/Request | Query |

Create Collection in MongoDB

To create a collection named todos:

db.createCollection("todos")Create Operation in MongoDB

Insert a new document into the todos collection:

db.todos.insert({ task: "Learn MongoDB", status: "In Progress" })Read Operation in MongoDB

Find documents with status "In Progress" in the todos collection:

db.todos.find({ status: "In Progress" })Update Operation in MongoDB

Update a document in the todos collection:

db.todos.update({ task: "Learn MongoDB" }, { $set: { status: "Completed" } })Delete Operation in MongoDB

Remove a document from the todos collection:

db.todos.remove({ task: "Learn MongoDB" })Case Study: Travel Agency Database

Requirements Overview

- Booking Data: High transaction rates with reservations, cancellations, and updates

- Customer Profiles: Rich customer data including preferences, booking history, and loyalty program information

- Travel Inventory: Diverse data from multiple sources, including flights, hotels, and tours, requiring flexible schema and fast querying

- Multi-User Access: Access control to manage different levels of user permissions for staff and management

Which type of database (SQL, IndexedDB, or MongoDB) would you recommend for each requirement, and why?

Please Install MongoDB

Today

- Why Data Quality? ✓

- Definition of Data Quality ✓

- Implementation of Data Quality ✓

- Introduction MongoDB ✓

- Project Part 1, Homework 2, Lab 2

Project Part 1: Motivation

Simulate working with large data sets to explore performance optimization in NoSQL databases. Techniques include read-only flags, indexing, and dedicated object stores

Using a "Todo List" domain, this project improves data query performance and teamwork skills. Alternate domains may be used, provided they maintain the same 'todo' object structure

Project Part 1: Implementation

- Create "TodoList" IndexedDB store with 100,000 objects; display on the browser

- Assign 'completed' status to 1,000 objects, others to 'in progress'

- Time and display the reading of all 'completed' status objects

- Apply a read-only flag, remeasure read time for 'completed' tasks

- Index the 'status' field, time read operations for 'completed' tasks

- Establish "TodoListCompleted" store, move all completed tasks, and time reads

Documentation at MDN Web Docs

Project Part 1: Delivery

- List team members with IDs

- Provide screenshots for tasks 1 to 6

- Share lessons and insights from the project

- Include screenshot of GitHub branch submission (e.g., project_1_team_1)

Upload one PDF with all project details (e.g., project_1_team_1.pdf)

Project Publishing Opportunity

International Conference on Emerging Data and Industry (EDI40)

Lab 2

- Data Modeling

- Data Transformation

- Data Integrity Verification

- Data Validity Verification

Homework 2

- IndexedDB Performance

- IndexedDB Aggregation

- IndexedDB ACID

- Weather Data Requirements

Today

- Why Data Quality? ✓

- Definition of Data Quality ✓

- Implementation of Data Quality ✓

- Introduction MongoDB ✓

- Project Part 1, Homework 2, Lab 2 ✓

Today

- Syllabus Update

- Definition of Data Quality

- Implementation of Data Quality

Syllabus Update

TA Chentankumar Patil (c_patil@u.pacific.edu)

TA Suhasi Miteshkumar Daftary (s_daftary@u.pacific.edu)

Definition of Data Quality

- Integrity: Data maintains accuracy and consistency ✓

- Validity: Data adheres to established formats and rules ✓

- Consistency: Data is consistent across datasets

- Accuracy: Data correctly reflects real-world conditions

- Completeness: All necessary data is included; essential for analysis

- Timeliness: Data is available when needed; current conditions

- Uniqueness: No unnecessary duplicates; each entry is distinct

Data Quality and Consistency

Data is uniform across the database(s), collection(s), and document(s)How to Maintain Data Consistency?

One database, collection, document, field per value

realistic for distributed databases?

CAP Theorem 🤔

Data Quality and Accuracy

Data correctly reflects real-world valuesHow to Maintain Data Accuracy?

Validate data at entry

Regular audits

Monitor dashboard

Automation

Data Quality and Completeness

Data is comprehensive and includes all information

How to Maintain Data Completeness?

Mandatory fields are filled

Regular audits

Monitor dashboards

Automation

Data Quality and Timeliness

Data is up-to-date and available when needed

CAP Theorem 🤔

How to Maintain Data Timeliness?

Distribute data

Real-time updates

Set data refresh schedules

Monitor for delays

Automate data pipelines

Data Quality and Uniqueness

Each record is distinct, with no duplicates

How to Maintain Data Uniqueness?

Unique constraints

Monitor for duplicates

Automate data checks

Definition of Data Quality

- Integrity: Data maintains accuracy and consistency ✓

- Validity: Data adheres to established formats and rules ✓

- Consistency: Data is consistent across datasets ✓

- Accuracy: Data correctly reflects real-world conditions ✓

- Completeness: All necessary data is included; essential for analysis ✓

- Timeliness: Data is available when needed; current conditions ✓

- Uniqueness: No unnecessary duplicates; each entry is distinct ✓

Today

- Syllabus Update ✓

- Definition of Data Quality ✓

- Implementation of Data Quality

Please start the computer / browser

Flower Store

- Name: The flower's common name

- Color: The primary color of the flower

- Price: The selling price per stem or bunch

- Description: Optional details about the flower

Please recommend a data model

Please start MongoDB

Create Database

use flowerDatabaseCreate Collection

db.createCollection("flowerCollection")CRUD Operators

| Operation | Command | Description |

|---|---|---|

| Create | db.collection.insertOne(document) |

Inserts a single document into the collection |

| Create | db.collection.insertMany([document1, document2, ...]) |

Inserts multiple documents into the collection |

| Read | db.collection.find(query) |

Finds documents that match the query |

| Read | db.collection.findOne(query) |

Finds a single document that matches the query |

| Update | db.collection.updateOne(filter, update) |

Updates a single document that matches the filter |

| Update | db.collection.updateMany(filter, update) |

Updates multiple documents that match the filter |

| Delete | db.collection.deleteOne(filter) |

Deletes a single document that matches the filter |

| Delete | db.collection.deleteMany(filter) |

Deletes multiple documents that match the filter |

Add one Flower

db.flowerCollection.insertOne({

name: "Orchid",

color: "Purple",

price: 4.0,

stock: 25

});

Add many Flowers

db.flowerCollection.insertMany([

{ name: "Rose", color: "Red", price: 2.5, stock: 50 },

{ name: "Tulip", color: "Yellow", price: 1.5, stock: 30 },

{ name: "Lily", color: "White", price: 3.0, stock: 20 },

{ name: "Daisy", color: "Pink", price: 1.2, stock: 40 }

]);

Data Quality and Integrity

[

{ name: "Daisy", color: "Pink", price: 1.2, stock: 40 }

];

Flower Store

- Name: The flower's common name

- Color: The primary color of the flower

- Price: The selling price per stem or bunch

- Description: Optional details about the flower

Set Default Value

db.flowersCollection.updateMany(

{ $or: [

{ description: { $exists: false } },

{ description: { $eq: undefined } }

]

},

{ $set: { description: "unset" } }

);

db.flowersCollection.find({});Comparison Operators

| Operator | Description | Use Case |

|---|---|---|

$gt |

Matches values greater than a specified value | Find documents where a field's value is greater than a given value |

$lt |

Matches values less than a specified value | Find documents where a field's value is less than a given value |

$in |

Matches any of the values in an array | Find documents where a field contains any value from a list |

$ne |

Matches values not equal to a specified value | Find documents where a field is not equal to a given value |

$exists |

Checks for the existence of a field | Find documents where a field either exists or does not |

How to find all flowers that cost more than $20 in MongoDB?

db.flowersCollection.find({ price: { $gt: 20 } });How to find the first flower that cost more than $20 in MongoDb?

Logical Operators

| Operator | Description | Use Case |

|---|---|---|

$or |

At least one condition must be true | Find documents where either condition1 or condition2 is true |

$and |

All conditions must be true | Find documents where both condition1 and condition2 are true |

$not |

Inverts the effect of a query expression | Find documents that do not match a specific condition |

$nor |

None of the conditions must be true | Find documents where none of the specified conditions are true |

How to find the first flower that cost more than $20 and has at least 5 available in MongoDb?

Evaluation Operators

| Operator | Description | Use Case |

|---|---|---|

$regex |

Pattern matching using regular expressions | Find documents where a field matches a regex pattern |

$size |

Matches arrays with the specified number of elements | Find documents where an array field has a specific size |

$type |

Matches documents where a field is a specific type | Find documents where a field is of a certain type (e.g., string, number) |

$expr |

Allows aggregation expressions within the query | Find documents by evaluating complex expressions |

$mod |

Performs modulo operation on the value of a field | Find documents where a field value, divided by a divisor, has a specific remainder. |

MongoDB Cursor Methods

| Method | Command | Description |

|---|---|---|

| Limit | db.collection.find().limit(n) |

Limits the number of documents returned by the query to n |

| Sort | db.collection.find().sort({ field: 1 }) |

Sorts the documents by the specified field. Use 1 for

ascending and -1 for descending order |

| Skip | db.collection.find().skip(n) |

Skips the first n documents of the query results |

| Count | db.collection.countDocuments(query) |

Returns the number of documents that match the query criteria |

| Distinct | db.collection.distinct(field) |

Returns an array of distinct values for the specified field in the

query results |

| ForEach | db.collection.find().forEach(callback) |

Iterates over each document in the cursor and executes the provided

callback function.

|

| Map | db.collection.find().map(callback) |

Transforms the documents in the cursor using the provided callback

function and returns an array of the transformed documents |

| Next | db.collection.find().next() |

Returns the next document in the cursor |

- Find all flowers that have a price greater than $15

- Find all flowers that are either red or yellow

- Find flowers that are out of stock (stock = 0)

- Find the cheapest flower in the store

- Update the stock of all tulips by adding 10 units

- Delete all flowers that have a price less than $5

- Find all flowers that have a price between $10 and $20

- List all flowers sorted by price in descending order

- Add a "description" field to all flowers, setting it to "Beautiful flower."

- Find flowers that are not pink in color

Reading Material

Questions on Data Quality?

- How would you detect and remove duplicate entries in a dataset?

- What steps would you take to handle missing data in key fields?

- How would you implement validation checks for data accuracy at the point of entry?

- How can you ensure that data types are consistent across your dataset?

- What tools would you use to perform regular data audits for quality control?

- How would you handle outdated data in a system requiring real-time updates?

- How can you automate data cleaning to maintain quality over time?

- What method would you use to maintain referential integrity between related datasets?

- How would you assess the completeness of data for a reporting task?

- What techniques would you use to ensure data quality during migration between systems?

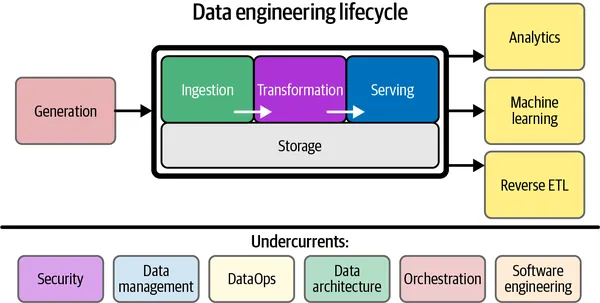

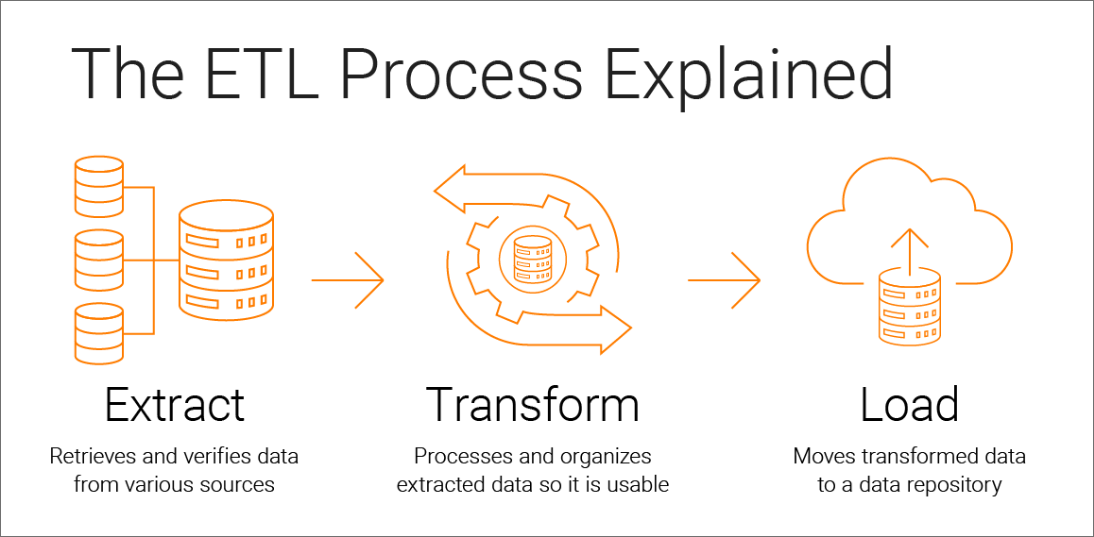

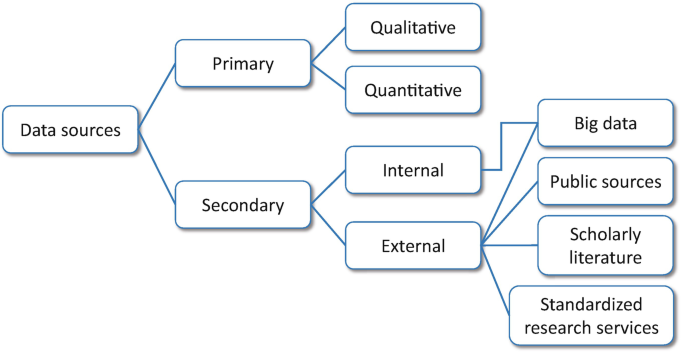

Data Ingestion and Processing

This week

- Practice NoSQL Queries

- Why Data?

- Ingestion (Extract)

- Processing (Transform)

- Loading (Load)

IndexedDB: Query 1

How to open a connection to an IndexedDB database called "BookStore"?

const request = indexedDB.open("BookStore", 1);

request.onerror = function(event) {

console.log("Error opening database");

};

request.onsuccess = function(event) {

const db = event.target.result;

console.log("Database opened successfully");

};

IndexedDB: Query 2

How can you create an object store for "Books" with "bookId" as the key?

request.onupgradeneeded = function(event) {

const db = event.target.result;

const objectStore = db.createObjectStore("Books", { keyPath: "bookId" });

console.log("Object store 'Books' created");

};

IndexedDB: Query 3

How to add a new book to the "Books" object store?

const transaction = db.transaction(["Books"], "readwrite");

const objectStore = transaction.objectStore("Books");

const request = objectStore.add({ bookId: 1, title: "...", author: "..." });

request.onsuccess = function(event) {

console.log("Book added successfully");

};

How do you create a database in MongoDB called "BookStore"?

use BookStore;

MongoDB: Query 2

How can you create a collection called "Books" in the "BookStore" database?

db.createCollection("Books");

MongoDB: Query 3

How do you insert a new book into the "Books" collection?

db.Books.insertOne({

title: "New Book",

author: "Author X",

price: 19.99,

copiesSold: 500

});

This week

- Practice NoSQL Queries ✓

- Why Data?

- Ingestion (Extract)

- Processing (Transform)

- Loading (Load)

Why Data? To Make Better Decisions

- ✓ Business Decisions

- ✓ Maintenance Decisions

- ✓ Operational Decisions

- ✓ Strategic Decisions

- ✓ Resource Allocation

Decisions

- ✓ Marketing Decisions

- ✓ Risk Management Decisions

- ✓ Product Development

Decisions

- ✓ Customer Satisfaction

Decisions

- ✓ Business Decisions

- ✓ Maintenance Decisions

- ✓ Operational Decisions

- ✓ Strategic Decisions

- ✓ Resource Allocation Decisions

- ✓ Marketing Decisions

- ✓ Risk Management Decisions

- ✓ Product Development Decisions

- ✓ Customer Satisfaction Decisions

We Collect Data to Answer Questions

Key Insight: Data is collected to provide

insights

and solutions

But first, we need to ask

the right questions.

Without a clear question, the data may lack purpose or

direction.

Key Insight: Data is collected to provide insights and solutions

But first, we need to ask the right questions.

Without a clear question, the data may lack purpose or direction.

When Should We Perform Equipment Maintenance?

Data Insights:

-

✓ Monitor sensor data from equipment.

-

✓ Analyze historical maintenance

records.

-

✓ Use predictive maintenance algorithms.

Data-driven decision: Schedule maintenance proactively to prevent

costly

breakdowns and downtime.

Data Insights:

- ✓ Monitor sensor data from equipment.

- ✓ Analyze historical maintenance records.

- ✓ Use predictive maintenance algorithms.

Data-driven decision: Schedule maintenance proactively to prevent costly breakdowns and downtime.

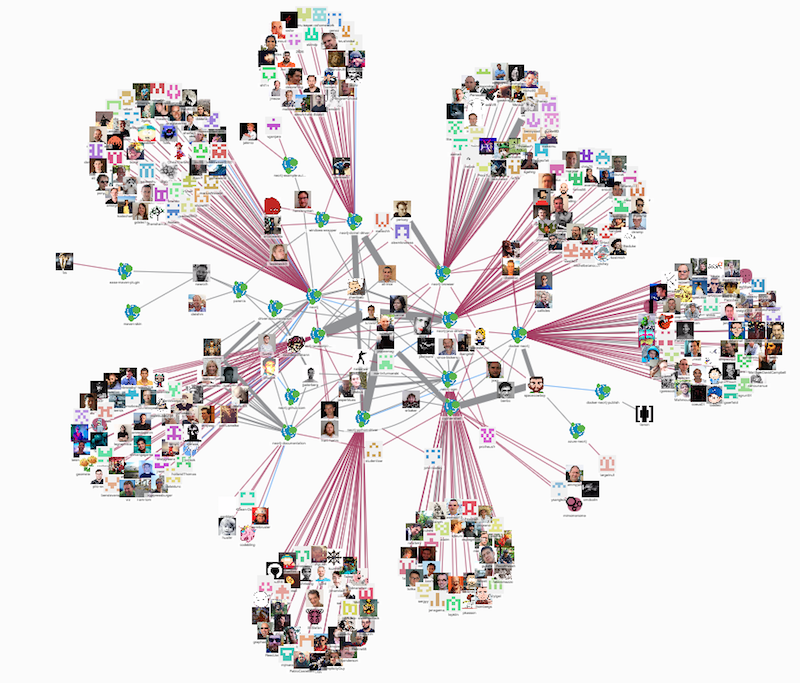

Who Are Our Most Profitable Customers?

Data Insights:

-

✓ Analyze customer lifetime value (CLV).

-

✓ Segment customers based on purchasing

behavior.

-

✓ Track engagement and retention rates.

Data-driven decision: Focus marketing efforts on high-value customer

segments to maximize profitability.

Data Insights:

- ✓ Analyze customer lifetime value (CLV).

- ✓ Segment customers based on purchasing behavior.

- ✓ Track engagement and retention rates.

Data-driven decision: Focus marketing efforts on high-value customer segments to maximize profitability.

Most / Least Bought Genres?

Using data from our book collection, we want to determine:

- ✓ Which genre has sold the most copies?

- ✓ Which genre has sold the least copies?

We can find this by analyzing the total copies sold for each genre.

This week

- Practice NoSQL Queries ✓

- Why Data? ✓

- Ingestion (Extract)

- Processing (Transform)

- Loading (Load)

How to Implement a Data Pipeline Process?

data extraction first or user interface first?

today - data extraction first to evaluate the data quality

What is Data Quality?

- Integrity: Data maintains accuracy and consistency

- Validity: Data adheres to established formats and rules

- Consistency: Data is consistent across datasets

- Accuracy: Data correctly reflects real-world conditions

- Completeness: All necessary data is included

- Timeliness: Data is available when needed; current conditions

- Uniqueness: No unnecessary duplicates; each entry is distinct

MongoDB Connection String

Use the following connection string to connect to your MongoDB cluster:

mongodb+srv://<db_username>:<db_password>@cluster0.lixbqmp.mongodb.net/

Replace <db_username> and <db_password> with your actual database credentials.

Please Create flower collection

Please Add flower documents 🌼

Find Incomplete Data

db.flowerCollection.find({

$or: [

{ fieldName: null },

{ fieldName: "" },

{ fieldName: "unset" },

{ fieldName: { $exists: false } }

]

}).count();

Find Duplicate Values

db.flowerCollection.aggregate([

{ $group: { _id: "$fieldName", count: { $sum: 1 } } },

{ $match: { count: { $gt: 1 } } }

]);

Find Invalid Field Length

db.flowerCollection.find({

$expr: { $gt: [ { $strLenCP: "$fieldName" }, 50 ] }

});

Find Outdated Data

db.flowerCollection.find({

createdAt: { $gte: new Date("2023-01-01"), $lt: new Date("2023-12-31") }

});

What else do you recommend?

This week

- Practice NoSQL Queries ✓

- Why Data? ✓

- Ingestion (Extract) ✓

- Processing (Transform) → Thursday

- Loading (Load) → Thursday

Project Part 1: Motivation

Simulate working with large data sets to explore performance optimization in NoSQL databases. Techniques include read-only flags, indexing, and dedicated object stores

Using a "Todo List" domain, this project improves data query performance and teamwork skills. Alternate domains may be used, provided they maintain the same 'todo' object structure

Project Part 1: Implementation

- Create "TodoList" IndexedDB store with 100,000 objects; display on the browser

- Assign 'completed' status to 1,000 objects, others to 'in progress'

- Time and display the reading of all 'completed' status objects

- Apply a read-only flag, remeasure read time for 'completed' tasks

- Index the 'status' field, time read operations for 'completed' tasks

- Establish "TodoListCompleted" store, move all completed tasks, and time reads

Documentation at MDN Web Docs

This week

- Practice NoSQL Queries ✓

- Why Data? ✓

- Ingestion (Extract) ✓

- Processing (Transform)

- Loading (Load)

Realtime Stocks Dashboard

NasdaqWhat makes a good dashboard?

- Clarity: Present data clearly and understandably

- Relevance: Display information for the user's needs

- Interactivity: Enable data drilling and filtering

- Consistency: Use uniform formats for comparison

- Visualization: Choose appropriate charts and graphs

- Performance: Ensure fast load times

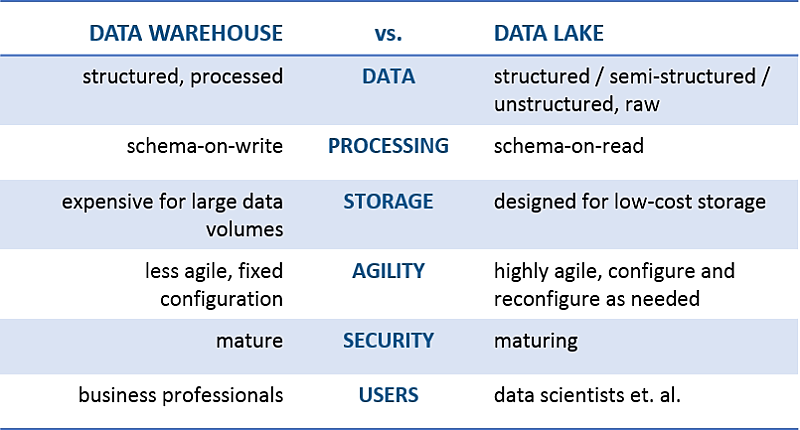

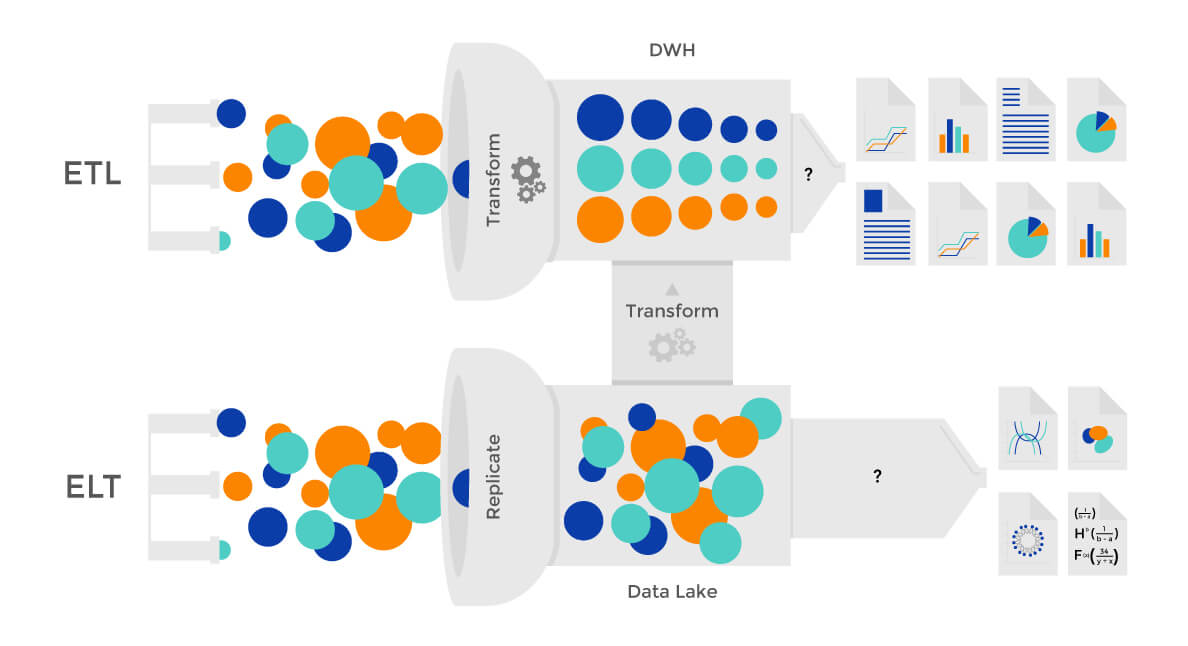

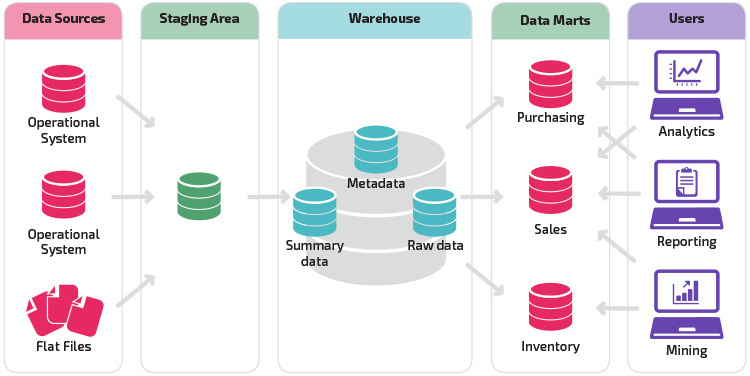

What's ETL Phase's Responsibility?

| Phase | Description | Purpose |

|---|---|---|

| Extract | Contains raw data from initial collection | Data extraction for analysis |

| Transform | Contains cleaned and transformed data. | Data transformation for quality improvement |

| Load | Contains final data ready for reporting | Data loading for final analysis and reporting |

How to Separate Each Phase's Data?

| Level | Pros | Cons |

|---|---|---|

| Single JSON Object |

|

|

| Collections |

|

|

| Databases |

|

|

| Clusters |

|

|

How to Separate Each Phase's Program?

| Aspect | Separate Programs | Single Program |

|---|---|---|

| Flexibility | High flexibility in customizing and scaling individual components | Less flexibility; changes impact the entire system |

| Complexity | Potentially complex to manage multiple systems and integrations | Single point of management, simpler integration |

| Maintenance | Requires updates and maintenance for each component separately | Centralized maintenance, easier to manage updates |

| Performance | Can optimize each component for performance but may have integration overhead | Performance may be impacted by the limitations of a single system |

| Cost | Potentially higher costs due to multiple tools and licenses | Single license and tool may reduce costs but may come with a higher upfront investment |

How to Track ETL Documents?

| Aspect | Pros | Cons | Best Practice |

|---|---|---|---|

| Data Lineage Tracking | Enables tracing data back to the source | May cause confusion if not handled properly | Include source UUID in all stages |

| Consistency Across Stages | Ensures consistency in references | Risk of data overwrite during transformation | Use unique UUIDs with original UUID reference |

| Linking Related Data | Maintains relationships throughout ETL | Can complicate aggregation and analytics | Maintain separate UUIDs for entities and stages |

| Handling Deleted Data | Ensures accurate history of entity changes | Potential for reintroduced data conflicts | Implement checks for UUID re-use and versioning |

Update Frequency by ETL Phase Across Domains

| ETL Phase | Navigation | Healthcare | Agriculture |

|---|---|---|---|

| Extract | High | Mid | Mid |

| Transform | High | High | Low |

| Load | High | Low | Mid |

What Information Should the Extract Flower Data Quality Dashboard Load?

How many flowers?

const totalDocuments = db.flowerCollection.find().count();

What are the flower keys?

// Utility function to flatten nested objects into dot notation paths

function flattenObject(obj, parent = '', res = {}) {

for (const key in obj) {

const propName = parent ? `${parent}.${key}` : key;

if (typeof obj[key] === 'object' && obj[key] !== null && !Array.isArray(obj[key])) {

flattenObject(obj[key], propName, res);

} else {

res[propName] = obj[key];

}

}

return res;

}

// Get all keys from documents

const combinedAttributes = sampleDocuments.reduce((acc, doc) => {

return { ...acc, ...flattenObject(doc) };

}, {});

const allKeys = Object.keys(combinedAttributes);

console.log('All Document Keys:', allKeys);How many incomplete flowers?

const incompleteFieldsCondition = schemaKeys.map(key => {

if (key === '_id') return {};

return typeof FlowerModel.schema.paths[key].instance === 'Number'

? { [key]: { $lte: 0 } }

: { [key]: { $in: ['', null] } };

});

const incompleteDocumentsCount = await FlowerModel.countDocuments({

$or: incompleteFieldsCondition

});

const incompletePercentage = totalDocuments > 0

? ((incompleteDocumentsCount / totalDocuments) * 100).toFixed(2)

: 0;How many duplicate values accross documents?

const duplicateField = 'name';

const duplicates = await FlowerModel.aggregate([

{ $group: { _id: `$${duplicateField}`, count: { $sum: 1 } } },

{ $match: { count: { $gt: 1 } } }

]);

const duplicateCount = duplicates.reduce((acc, curr) => acc + curr.count, 0);

How many price outliers

const outliers = db.flowerCollection.find({ price: { $gt: 1000 } }).count();What's the attribute availability?

const attributeCounts = {};

const attributeAvailability = {};

for (const key of schemaKeys) {

if (key !== '_id.buffer' && !key.startsWith('_id')) {

const count = await FlowerModel.countDocuments({

[key]: { $ne: null, $nin: ['', 0] }

});

attributeCounts[key] = count;

attributeAvailability[key] = {

count: count,

percentage: totalDocuments > 0 ? ((count / totalDocuments) * 100).toFixed(2) : 0

};

}

}

attributeCounts['_id'] = await FlowerModel.countDocuments({ '_id': { $exists: true } });

attributeAvailability['_id'] = {

count: attributeCounts['_id'],

percentage: totalDocuments > 0 ? ((attributeCounts['_id'] / totalDocuments) * 100).toFixed(2) : 100

};

const sortedAttributes = Object.entries(attributeAvailability)

.sort(([a], [b]) => a.localeCompare(b));

Transforming Missing Values: JavaScript Code

const transformMissingValues = (data) => {

return data.map(record => {

for (const key in record) {

if (record[key] === null || record[key] === undefined) {

record[key] = 'Default Value'; // or use an imputation method

}

}

return record;

});

};

Transforming Missing Values: MongoDB Query

db.collection.updateMany(

{ "fieldName": { $exists: false } },

{ $set: { "fieldName": "Default Value" } }

);

Transforming Duplicates: JavaScript Code

const transformDuplicates = (data) => {

const uniqueData = [];

const seen = new Set();

data.forEach(record => {

const key = JSON.stringify(record); // or use a unique identifier

if (!seen.has(key)) {

seen.add(key);

uniqueData.push(record);

}

});

return uniqueData;

};

Transforming Duplicates: MongoDB Query

db.collection.aggregate([

{ $group: { _id: "$fieldName", count: { $sum: 1 } } },

{ $match: { count: { $gt: 1 } } },

{ $project: { _id: 0, fieldName: "$_id" } }

]).forEach(doc => {

db.collection.deleteMany({ fieldName: doc.fieldName });

});

Transforming Data Formats: JavaScript Code

const transformFormats = (data) => {

return data.map(record => {

record.date = new Date(record.date).toISOString(); // Standardize date format

record.text = record.text.toUpperCase(); // Standardize text capitalization

return record;

});

};

Transforming Data Formats: MongoDB Query

db.collection.updateMany(

{},

[

{ $set: { date: { $dateToString: { format: "%Y-%m-%d", date: "$date" } } } },

{ $set: { text: { $toUpper: "$text" } } }

]

);

Transforming Outliers: JavaScript Code

const transformOutliers = (data) => {

const threshold = 1000; // Example threshold

return data.filter(record => record.value <= threshold);

};

Transforming Outliers: MongoDB Query

db.collection.deleteMany({ value: { $gt: 1000 } });

Transforming Key Case Sensitivity: JavaScript Code

const transformKeyCases = (data) => {

return data.map(record => {

const normalizedRecord = {};

for (const key in record) {

const normalizedKey = key.toLowerCase();

normalizedRecord[normalizedKey] = record[key];

}

return normalizedRecord;

});

};

Transforming Key Case Sensitivity: MongoDB Query

db.collection.find().forEach(doc => {

const normalizedDoc = {};

for (const key in doc) {

normalizedDoc[key.toLowerCase()] = doc[key];

}

db.collection.updateOne({ _id: doc._id }, { $set: normalizedDoc });

});

Healthcare Patient Records

- Extract: What types of patient data to extract?

- Transform: How to maintain quality and compatibility?

- Load: Efficiently load data into the healthcare system?

Agriculture Crop Management

- Extract: What crop management data to extract?

- Transform: Transform sensor readings and aggregate data?

- Load: Load data into the farm management system?

E-Commerce Product Catalog

- Extract: What data sources are needed for the catalog?

- Transform: How to ensure data consistency and completeness?

- Load: Best approach to load data into the database?

Questions on Data Processing and ETL?

Project Part 1: Implementation

- Create "TodoList" IndexedDB store with 100,000 objects; display on the browser

- Assign 'completed' status to 1,000 objects, others to 'in progress'

- Time and display the reading of all 'completed' status objects

- Apply a read-only flag, remeasure read time for 'completed' tasks

- Index the 'status' field, time read operations for 'completed' tasks

- Establish "TodoListCompleted" store, move all completed tasks, and time reads

Documentation at MDN Web Docs

Review Homework 1

| Question | Answer |

|---|---|

| What is a key feature of unstructured data in NoSQL databases? | Data can be stored without a fixed schema |